@@ -84,45 +81,43 @@ chat分析报告生成 | [实验性功能] 运行后自动生成总结汇报

## Run directly (Windows, Linux or MacOS)

-下载项目

-

+### 1. Download the project

```sh

git clone https://github.com/binary-husky/chatgpt_academic.git

cd chatgpt_academic

```

-我们建议将`config.py`复制为`config_private.py`并将后者用作个性化配置文件以避免`config.py`中的变更影响你的使用或不小心将包含你的OpenAI API KEY的`config.py`提交至本项目。

+### 2. Configure API_KEY and the proxy settings

-```sh

-cp config.py config_private.py

+In `config.py`, configure the overseas proxy and the OpenAI API KEY as follows:

```

-

-在`config_private.py`中,配置 海外Proxy 和 OpenAI API KEY

-```

-1. 如果你在国内,需要设置海外代理才能够使用 OpenAI API,你可以通过 config.py 文件来进行设置。

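+1. If you are in mainland China, you need an overseas proxy to reach the OpenAI API reliably. Read config.py carefully for the setup: (1) set USE_PROXY to True; (2) edit proxies as described there.

2. Configure the OpenAI API KEY. Register on the OpenAI website to obtain an API KEY, then put it in config.py.

+3. Issues related to the proxy network (timeouts, proxy not taking effect) are collected at https://github.com/binary-husky/chatgpt_academic/issues/1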

```

-安装依赖

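+(P.S. At startup the program first checks for a private configuration file named `config_private.py` and uses its settings to override the settings of the same name in `config.py`. So, if you understand this configuration-reading logic, we strongly recommend creating a new file named `config_private.py` next to `config.py` and copying the settings from `config.py` into it. `config_private.py` is not tracked by git, which keeps your private information safer.)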
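The override rule described in the P.S. can be sketched as follows. This is a minimal illustration, not the project's actual `get_conf` implementation: the function name `read_single_conf` and the `SimpleNamespace` stand-ins for the two config modules are assumptions made for this example.

```python
from types import SimpleNamespace


def read_single_conf(name, default_conf, private_conf=None):
    """Return setting `name`, preferring the private config when it defines it."""
    if private_conf is not None and hasattr(private_conf, name):
        return getattr(private_conf, name)
    return getattr(default_conf, name)


# Stand-ins for the modules `config` and `config_private` (hypothetical values).
default_conf = SimpleNamespace(API_KEY="sk-placeholder", USE_PROXY=False, WEB_PORT=-1)
private_conf = SimpleNamespace(API_KEY="sk-my-private-key", USE_PROXY=True)

print(read_single_conf("API_KEY", default_conf, private_conf))   # private value wins
print(read_single_conf("WEB_PORT", default_conf, private_conf))  # falls back to config.py
```

Only names that actually exist in the private file are overridden, so a partial `config_private.py` should be enough under this scheme.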

+

+### 3. Install dependencies

```sh

-python -m pip install -r requirements.txt

+# (Option 1) Recommended

+python -m pip install -r requirements.txt

+

+# (Option 2) If you use anaconda, the steps are similar:

+# (Option 2.1) conda create -n gptac_venv python=3.11

+# (Option 2.2) conda activate gptac_venv

+# (Option 2.3) python -m pip install -r requirements.txt

+

+# Note: use the official pip index or the Aliyun mirror; other mirrors (such as some university mirrors) may cause problems. To switch the index temporarily:

+# python -m pip install -r requirements.txt -i https://mirrors.aliyun.com/pypi/simple/

```

-或者,如果你希望使用`conda`

-

-```sh

-conda create -n gptac 'gradio>=3.23' requests

-conda activate gptac

-python3 -m pip install mdtex2html

-```

-

-运行

-

+### 4. Run

```sh

python main.py

```

-测试实验性功能

+### 5. Test the experimental features

```

- 测试C++项目头文件分析

input区域 输入 `./crazy_functions/test_project/cpp/libJPG` , 然后点击 "[实验] 解析整个C++项目(input输入项目根路径)"

@@ -136,8 +131,6 @@ python main.py

点击 "[实验] 实验功能函数模板"

```

-与代理网络有关的issue(网络超时、代理不起作用)汇总到 https://github.com/binary-husky/chatgpt_academic/issues/1

-

## 使用docker (Linux)

``` sh

@@ -145,7 +138,7 @@ python main.py

git clone https://github.com/binary-husky/chatgpt_academic.git

cd chatgpt_academic

# 配置 海外Proxy 和 OpenAI API KEY

-config.py

+# Edit config.py with any text editor

# 安装

docker build -t gpt-academic .

# 运行

@@ -166,20 +159,12 @@ input区域 输入 ./crazy_functions/test_project/python/dqn , 然后点击 "[

```

-## 使用WSL2(Windows Subsystem for Linux 子系统)

-选择这种方式默认您已经具备一定基本知识,因此不再赘述多余步骤。如果不是这样,您可以从[这里](https://learn.microsoft.com/zh-cn/windows/wsl/about)或GPT处获取更多关于子系统的信息。

+## Other deployment methods

+- Using WSL2 (Windows Subsystem for Linux)

+See [deployment wiki-1](https://github.com/binary-husky/chatgpt_academic/wiki/%E4%BD%BF%E7%94%A8WSL2%EF%BC%88Windows-Subsystem-for-Linux-%E5%AD%90%E7%B3%BB%E7%BB%9F%EF%BC%89%E9%83%A8%E7%BD%B2)

-WSL2可以配置使用Windows侧的代理上网,前置步骤可以参考[这里](https://www.cnblogs.com/tuilk/p/16287472.html)

-由于Windows相对WSL2的IP会发生变化,我们需要每次启动前先获取这个IP来保证顺利访问,将config.py中设置proxies的部分更改为如下代码:

-```python

-import subprocess

-cmd_get_ip = 'grep -oP "(\d+\.)+(\d+)" /etc/resolv.conf'

-ip_proxy = subprocess.run(

- cmd_get_ip, stdout=subprocess.PIPE, stderr=subprocess.PIPE, text=True, shell=True

- ).stdout.strip() # 获取windows的IP

-proxies = { "http": ip_proxy + ":51837", "https": ip_proxy + ":51837", } # 请自行修改

-```

-在启动main.py后,可以在windows浏览器中访问服务。至此测试、使用与上面其他方法无异。

+- Remote deployment with nginx

+See [deployment wiki-2](https://github.com/binary-husky/chatgpt_academic/wiki/%E8%BF%9C%E7%A8%8B%E9%83%A8%E7%BD%B2%E7%9A%84%E6%8C%87%E5%AF%BC)

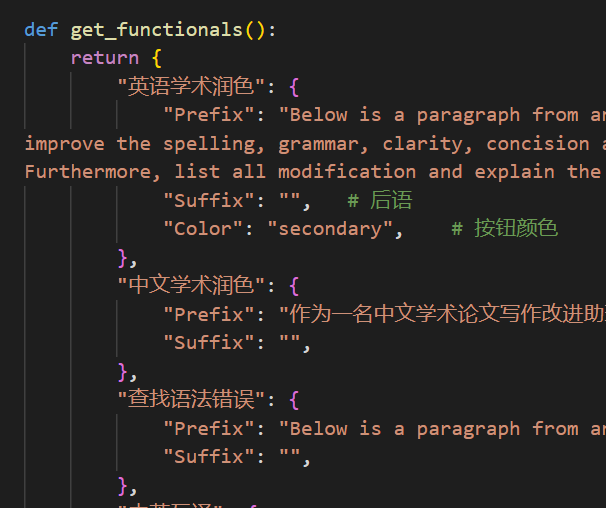

## 自定义新的便捷按钮(学术快捷键自定义)

@@ -204,7 +189,7 @@ proxies = { "http": ip_proxy + ":51837", "https": ip_proxy + ":51837", } # 请

如果你发明了更好用的学术快捷键,欢迎发issue或者pull requests!

## 配置代理

-

+### Method 1: the regular way

在```config.py```中修改端口与代理软件对应

@@ -216,6 +201,8 @@ proxies = { "http": ip_proxy + ":51837", "https": ip_proxy + ":51837", } # 请

```

python check_proxy.py

```
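Before running `check_proxy.py`, it can help to confirm that each proxy string has the `[protocol]://[address]:[port]` shape that `config.py` expects. The sketch below is an illustration only: `validate_proxy_url` is a hypothetical helper, and the set of accepted protocols is an assumption (the config comments name only socks5h and http).

```python
from urllib.parse import urlsplit

# Assumption: protocols commonly exposed by local proxy software.
ALLOWED_SCHEMES = {"http", "https", "socks5", "socks5h"}


def validate_proxy_url(url):
    """Check that `url` looks like [protocol]://[address]:[port]; return its parts."""
    parts = urlsplit(url)
    if parts.scheme not in ALLOWED_SCHEMES:
        raise ValueError(f"unsupported protocol: {parts.scheme!r}")
    if not parts.hostname:
        raise ValueError("missing address")
    if parts.port is None:
        raise ValueError("missing port")
    return parts.scheme, parts.hostname, parts.port


proxies = {
    "http": "socks5h://localhost:11284",
    "https": "socks5h://localhost:11284",
}
for key, url in proxies.items():
    print(key, validate_proxy_url(url))
```

A check like this only catches formatting mistakes; whether the proxy actually works still has to be tested with a real request.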

+### Method 2: tutorial for complete beginners

+[Tutorial for complete beginners](https://github.com/binary-husky/chatgpt_academic/wiki/%E4%BB%A3%E7%90%86%E8%BD%AF%E4%BB%B6%E9%97%AE%E9%A2%98%E7%9A%84%E6%96%B0%E6%89%8B%E8%A7%A3%E5%86%B3%E6%96%B9%E6%B3%95%EF%BC%88%E6%96%B9%E6%B3%95%E5%8F%AA%E9%80%82%E7%94%A8%E4%BA%8E%E6%96%B0%E6%89%8B%EF%BC%89)

## 兼容性测试

@@ -259,13 +246,44 @@ python check_proxy.py

### 模块化功能设计

-## Todo:

-- (Top Priority) 调用另一个开源项目text-generation-webui的web接口,使用其他llm模型

-- 总结大工程源代码时,文本过长、token溢出的问题(目前的方法是直接二分丢弃处理溢出,过于粗暴,有效信息大量丢失)

-- UI不够美观

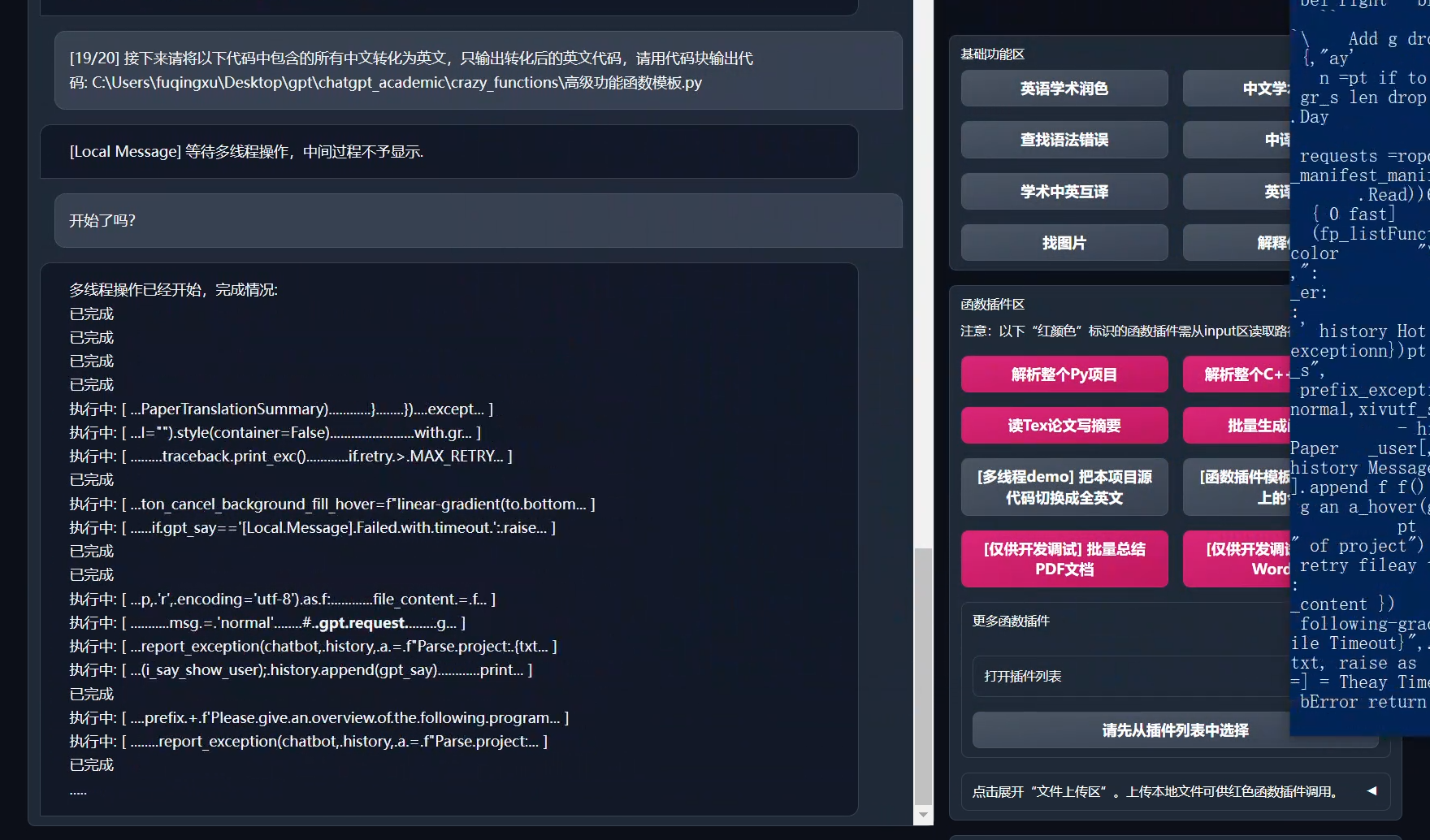

+### 源代码转译英文

+

+

+

+

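+## Todo and version roadmap:

+

+- version 3 (Todo):

+- - Support gpt4 and more LLMs

+- version 2.4+ (Todo):

+- - Fix overlong text and token overflow when summarizing the source code of large projects

+- - Package the project for deployment

+- - Improve the parameter interface of function plugins

+- - Self-update

+- version 2.4: (1) added full-text PDF translation; (2) added switching the position of the input area; (3) added a vertical layout option; (4) optimized multi-threaded function plugins.

+- version 2.3: improved multi-threaded interactivity

+- version 2.2: function plugins support hot reloading

+- version 2.1: collapsible layout

+- version 2.0: introduced modular function plugins

+- version 1.0: basic features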

+

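+## References and learning

+

+

+```

+The code borrows from the design of many other excellent projects, mainly:

+

+# Project 1: borrowed ChuanhuChatGPT's approach to reading the OpenAI json, recording the query history, and using the gradio queue

+https://github.com/GaiZhenbiao/ChuanhuChatGPT

+

+# Project 2: borrowed mdtex2html's approach to formula handling

+https://github.com/polarwinkel/mdtex2html

+

+

+```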

diff --git a/check_proxy.py b/check_proxy.py

index a6919dd..95a439e 100644

--- a/check_proxy.py

+++ b/check_proxy.py

@@ -3,7 +3,8 @@ def check_proxy(proxies):

import requests

proxies_https = proxies['https'] if proxies is not None else '无'

try:

- response = requests.get("https://ipapi.co/json/", proxies=proxies, timeout=4)

+ response = requests.get("https://ipapi.co/json/",

+ proxies=proxies, timeout=4)

data = response.json()

print(f'查询代理的地理位置,返回的结果是{data}')

if 'country_name' in data:

@@ -19,9 +20,36 @@ def check_proxy(proxies):

return result

+def auto_update():

+ from toolbox import get_conf

+ import requests

+ import time

+ import json

+ proxies, = get_conf('proxies')

+ response = requests.get("https://raw.githubusercontent.com/binary-husky/chatgpt_academic/master/version",

+ proxies=proxies, timeout=1)

+ remote_json_data = json.loads(response.text)

+ remote_version = remote_json_data['version']

+ if remote_json_data["show_feature"]:

+ new_feature = "新功能:" + remote_json_data["new_feature"]

+ else:

+ new_feature = ""

+ with open('./version', 'r', encoding='utf8') as f:

+ current_version = f.read()

+ current_version = json.loads(current_version)['version']

+ if (remote_version - current_version) >= 0.05:

+ print(

+ f'\n新版本可用。新版本:{remote_version},当前版本:{current_version}。{new_feature}')

+ print('Github更新地址:\nhttps://github.com/binary-husky/chatgpt_academic\n')

+ time.sleep(3)

+ return

+ else:

+ return

+

+

if __name__ == '__main__':

- import os; os.environ['no_proxy'] = '*' # 避免代理网络产生意外污染

+ import os

+ os.environ['no_proxy'] = '*' # 避免代理网络产生意外污染

from toolbox import get_conf

proxies, = get_conf('proxies')

check_proxy(proxies)

-

\ No newline at end of file
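The comparison at the heart of `auto_update` can be isolated from the network call, which makes it easier to test. The sketch below assumes the same JSON layout the patch reads (`version`, `show_feature`, `new_feature`); the function name `newer_version_available` and the `min_gap` parameter are hypothetical. Note that the patched `auto_update` itself performs a request with `timeout=1` and no exception handling, so callers may want to wrap it in `try`/`except`.

```python
import json


def newer_version_available(remote_text, local_text, min_gap=0.05):
    """Compare two version files (JSON text) and return (is_newer, feature_message)."""
    remote = json.loads(remote_text)
    local = json.loads(local_text)
    gap = remote["version"] - local["version"]
    message = ""
    if remote.get("show_feature"):
        message = "New feature: " + remote.get("new_feature", "")
    return gap >= min_gap, message


remote_text = '{"version": 2.5, "show_feature": true, "new_feature": "PDF translation"}'
local_text = '{"version": 2.4}'
print(newer_version_available(remote_text, local_text))
```

Because versions are stored as floats, a gap that sits exactly on the threshold is subject to rounding error; comparing integer tuples such as `(2, 5)` would avoid that, at the cost of changing the version file format.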

diff --git a/config.py b/config.py

index 7fc73db..f94f183 100644

--- a/config.py

+++ b/config.py

@@ -1,23 +1,31 @@

-# API_KEY = "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" 此key无效

+# [step 1]>> For example: API_KEY = "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" (this key is invalid)

API_KEY = "sk-此处填API密钥"

-API_URL = "https://api.openai.com/v1/chat/completions"

-# 改为True应用代理

+# [step 2]>> Set to True to use a proxy; leave unchanged when deploying directly on an overseas server

USE_PROXY = False

if USE_PROXY:

-

- # 填写格式是 [协议]:// [地址] :[端口] ,

+ # 填写格式是 [协议]:// [地址] :[端口],填写之前不要忘记把USE_PROXY改成True,如果直接在海外服务器部署,此处不修改

# 例如 "socks5h://localhost:11284"

- # [协议] 常见协议无非socks5h/http,例如 v2*** 和 s** 的默认本地协议是socks5h,cl**h 的默认本地协议是http

+ # [协议] 常见协议无非socks5h/http; 例如 v2**y 和 ss* 的默认本地协议是socks5h; 而cl**h 的默认本地协议是http

# [地址] 懂的都懂,不懂就填localhost或者127.0.0.1肯定错不了(localhost意思是代理软件安装在本机上)

- # [端口] 在代理软件的设置里,不同的代理软件界面不一样,但端口号都应该在最显眼的位置上

+ # [端口] 在代理软件的设置里找。虽然不同的代理软件界面不一样,但端口号都应该在最显眼的位置上

# 代理网络的地址,打开你的科学上网软件查看代理的协议(socks5/http)、地址(localhost)和端口(11284)

- proxies = { "http": "socks5h://localhost:11284", "https": "socks5h://localhost:11284", }

- print('网络代理状态:运行。')

+ proxies = {

+ # [协议]:// [地址] :[端口]

+ "http": "socks5h://localhost:11284",

+ "https": "socks5h://localhost:11284",

+ }

else:

proxies = None

- print('网络代理状态:未配置。无代理状态下很可能无法访问。')

+

+

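+# [step 3]>> The settings below can improve the experience, but in most cases they need no changes

+# Height of the chat window

+CHATBOT_HEIGHT = 1115

+

+# Window layout

+LAYOUT = "LEFT-RIGHT" # "LEFT-RIGHT" (side by side) # "TOP-DOWN" (stacked vertically)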

# 发送请求到OpenAI后,等待多久判定为超时

TIMEOUT_SECONDS = 25

@@ -28,11 +36,15 @@ WEB_PORT = -1

# 如果OpenAI不响应(网络卡顿、代理失败、KEY失效),重试的次数限制

MAX_RETRY = 2

-# 选择的OpenAI模型是(gpt4现在只对申请成功的人开放)

+# OpenAI模型选择是(gpt4现在只对申请成功的人开放)

LLM_MODEL = "gpt-3.5-turbo"

+# OpenAI的API_URL

+API_URL = "https://api.openai.com/v1/chat/completions"

+

# 设置并行使用的线程数

CONCURRENT_COUNT = 100

-# 设置用户名和密码

-AUTHENTICATION = [] # [("username", "password"), ("username2", "password2"), ...]

+# Set usernames and passwords (this feature is unstable and depends on the gradio version and the network; not recommended for local use)

+# [("username", "password"), ("username2", "password2"), ...]

+AUTHENTICATION = []

diff --git a/functional.py b/core_functional.py

similarity index 56%

rename from functional.py

rename to core_functional.py

index 2ed1507..722abc1 100644

--- a/functional.py

+++ b/core_functional.py

@@ -4,29 +4,38 @@

# 默认按钮颜色是 secondary

from toolbox import clear_line_break

-def get_functionals():

+

+def get_core_functions():

return {

"英语学术润色": {

# 前言

"Prefix": r"Below is a paragraph from an academic paper. Polish the writing to meet the academic style, " +

- r"improve the spelling, grammar, clarity, concision and overall readability. When neccessary, rewrite the whole sentence. " +

+ r"improve the spelling, grammar, clarity, concision and overall readability. When necessary, rewrite the whole sentence. " +

r"Furthermore, list all modification and explain the reasons to do so in markdown table." + "\n\n",

- # 后语

+ # 后语

"Suffix": r"",

"Color": r"secondary", # 按钮颜色

},

"中文学术润色": {

- "Prefix": r"作为一名中文学术论文写作改进助理,你的任务是改进所提供文本的拼写、语法、清晰、简洁和整体可读性," +

+ "Prefix": r"作为一名中文学术论文写作改进助理,你的任务是改进所提供文本的拼写、语法、清晰、简洁和整体可读性," +

r"同时分解长句,减少重复,并提供改进建议。请只提供文本的更正版本,避免包括解释。请编辑以下文本" + "\n\n",

"Suffix": r"",

},

"查找语法错误": {

- "Prefix": r"Below is a paragraph from an academic paper. " +

- r"Can you help me ensure that the grammar and the spelling is correct? " +

- r"Do not try to polish the text, if no mistake is found, tell me that this paragraph is good." +

- r"If you find grammar or spelling mistakes, please list mistakes you find in a two-column markdown table, " +

+ "Prefix": r"Can you help me ensure that the grammar and the spelling is correct? " +

+ r"Do not try to polish the text, if no mistake is found, tell me that this paragraph is good." +

+ r"If you find grammar or spelling mistakes, please list mistakes you find in a two-column markdown table, " +

r"put the original text the first column, " +

- r"put the corrected text in the second column and highlight the key words you fixed." + "\n\n",

+ r"put the corrected text in the second column and highlight the key words you fixed.""\n"

+ r"Example:""\n"

+ r"Paragraph: How is you? Do you knows what is it?""\n"

+ r"| Original sentence | Corrected sentence |""\n"

+ r"| :--- | :--- |""\n"

+ r"| How **is** you? | How **are** you? |""\n"

+ r"| Do you **knows** what **is** **it**? | Do you **know** what **it** **is** ? |""\n"

+ r"Below is a paragraph from an academic paper. "

+ r"You need to report all grammar and spelling mistakes as the example before."

+ + "\n\n",

"Suffix": r"",

"PreProcess": clear_line_break, # 预处理:清除换行符

},

@@ -34,9 +43,17 @@ def get_functionals():

"Prefix": r"Please translate following sentence to English:" + "\n\n",

"Suffix": r"",

},

- "学术中译英": {

- "Prefix": r"Please translate following sentence to English with academic writing, and provide some related authoritative examples:" + "\n\n",

- "Suffix": r"",

+ "学术中英互译": {

+ "Prefix": r"I want you to act as a scientific English-Chinese translator, " +

+ r"I will provide you with some paragraphs in one language " +

+ r"and your task is to accurately and academically translate the paragraphs only into the other language. " +

+ r"Do not repeat the original provided paragraphs after translation. " +

+ r"You should use artificial intelligence tools, " +

+ r"such as natural language processing, and rhetorical knowledge " +

+ r"and experience about effective writing techniques to reply. " +

+ r"I'll give you my paragraphs as follows, tell me what language it is written in, and then translate:" + "\n\n",

+ "Suffix": "",

+ "Color": "secondary",

},

"英译中": {

"Prefix": r"请翻译成中文:" + "\n\n",
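Each entry returned by `get_core_functions` carries a `Prefix`, a `Suffix`, and optionally a `PreProcess` callable. The sketch below shows one plausible way such an entry could be applied to user input; `build_prompt` and `clear_line_break_sketch` are hypothetical names, and the whitespace handling is an assumption rather than the behavior of `toolbox.clear_line_break`.

```python
def clear_line_break_sketch(text):
    """Hypothetical stand-in: collapse every whitespace run (newlines included) to one space."""
    return " ".join(text.split())


def build_prompt(entry, user_text):
    """Apply the optional PreProcess step, then wrap the text in Prefix and Suffix."""
    pre_process = entry.get("PreProcess")
    if pre_process is not None:
        user_text = pre_process(user_text)
    return entry.get("Prefix", "") + user_text + entry.get("Suffix", "")


entry = {
    "Prefix": "Please translate following sentence to English:" + "\n\n",
    "Suffix": "",
    "PreProcess": clear_line_break_sketch,
}
print(build_prompt(entry, "第一行\n第二行"))
```

Keeping the prompt text in data rather than in code is what lets a new button be added by editing the dictionary alone.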

diff --git a/crazy_functional.py b/crazy_functional.py

new file mode 100644

index 0000000..3e53f54

--- /dev/null

+++ b/crazy_functional.py

@@ -0,0 +1,115 @@

+from toolbox import HotReload # HotReload means hot reloading: after a function plugin is modified, the change takes effect without restarting the program

+

+

+def get_crazy_functions():

+ ###################### 第一组插件 ###########################

+ # [第一组插件]: 最早期编写的项目插件和一些demo

+ from crazy_functions.读文章写摘要 import 读文章写摘要

+ from crazy_functions.生成函数注释 import 批量生成函数注释

+ from crazy_functions.解析项目源代码 import 解析项目本身

+ from crazy_functions.解析项目源代码 import 解析一个Python项目

+ from crazy_functions.解析项目源代码 import 解析一个C项目的头文件

+ from crazy_functions.解析项目源代码 import 解析一个C项目

+ from crazy_functions.解析项目源代码 import 解析一个Golang项目

+ from crazy_functions.解析项目源代码 import 解析一个Java项目

+ from crazy_functions.解析项目源代码 import 解析一个Rect项目

+ from crazy_functions.高级功能函数模板 import 高阶功能模板函数

+ from crazy_functions.代码重写为全英文_多线程 import 全项目切换英文

+

+ function_plugins = {

+ "请解析并解构此项目本身(源码自译解)": {

+ "AsButton": False, # 加入下拉菜单中

+ "Function": HotReload(解析项目本身)

+ },

+ "解析整个Python项目": {

+ "Color": "stop", # 按钮颜色

+ "Function": HotReload(解析一个Python项目)

+ },

+ "解析整个C++项目头文件": {

+ "Color": "stop", # 按钮颜色

+ "Function": HotReload(解析一个C项目的头文件)

+ },

+ "解析整个C++项目(.cpp/.h)": {

+ "Color": "stop", # 按钮颜色

+ "AsButton": False, # 加入下拉菜单中

+ "Function": HotReload(解析一个C项目)

+ },

+ "解析整个Go项目": {

+ "Color": "stop", # 按钮颜色

+ "AsButton": False, # 加入下拉菜单中

+ "Function": HotReload(解析一个Golang项目)

+ },

+ "解析整个Java项目": {

+ "Color": "stop", # 按钮颜色

+ "AsButton": False, # 加入下拉菜单中

+ "Function": HotReload(解析一个Java项目)

+ },

+ "解析整个React项目": {

+ "Color": "stop", # 按钮颜色

+ "AsButton": False, # 加入下拉菜单中

+ "Function": HotReload(解析一个Rect项目)

+ },

+ "读Tex论文写摘要": {

+ "Color": "stop", # 按钮颜色

+ "Function": HotReload(读文章写摘要)

+ },

+ "批量生成函数注释": {

+ "Color": "stop", # 按钮颜色

+ "Function": HotReload(批量生成函数注释)

+ },

+ "[多线程demo] 把本项目源代码切换成全英文": {

+ # HotReload 的意思是热更新,修改函数插件代码后,不需要重启程序,代码直接生效

+ "Function": HotReload(全项目切换英文)

+ },

+ "[函数插件模板demo] 历史上的今天": {

+ # HotReload 的意思是热更新,修改函数插件代码后,不需要重启程序,代码直接生效

+ "Function": HotReload(高阶功能模板函数)

+ },

+ }

+ ###################### 第二组插件 ###########################

+ # [第二组插件]: 经过充分测试,但功能上距离达到完美状态还差一点点

+ from crazy_functions.批量总结PDF文档 import 批量总结PDF文档

+ from crazy_functions.批量总结PDF文档pdfminer import 批量总结PDF文档pdfminer

+ from crazy_functions.总结word文档 import 总结word文档

+ from crazy_functions.批量翻译PDF文档_多线程 import 批量翻译PDF文档

+

+ function_plugins.update({

+ "批量翻译PDF文档(多线程)": {

+ "Color": "stop",

+ "AsButton": True, # shown as a button (True), not in the dropdown menu

+ "Function": HotReload(批量翻译PDF文档)

+ },

+ "[仅供开发调试] 批量总结PDF文档": {

+ "Color": "stop",

+ "AsButton": False, # 加入下拉菜单中

+ # HotReload 的意思是热更新,修改函数插件代码后,不需要重启程序,代码直接生效

+ "Function": HotReload(批量总结PDF文档)

+ },

+ "[仅供开发调试] 批量总结PDF文档pdfminer": {

+ "Color": "stop",

+ "AsButton": False, # 加入下拉菜单中

+ "Function": HotReload(批量总结PDF文档pdfminer)

+ },

+ "批量总结Word文档": {

+ "Color": "stop",

+ "Function": HotReload(总结word文档)

+ },

+ })

+

+ ###################### 第三组插件 ###########################

+ # [第三组插件]: 尚未充分测试的函数插件,放在这里

+ try:

+ from crazy_functions.下载arxiv论文翻译摘要 import 下载arxiv论文并翻译摘要

+ function_plugins.update({

+ "一键下载arxiv论文并翻译摘要(先在input输入编号,如1812.10695)": {

+ "Color": "stop",

+ "AsButton": False, # 加入下拉菜单中

+ "Function": HotReload(下载arxiv论文并翻译摘要)

+ }

+ })

+

+ except Exception as err:

+ print(f'[下载arxiv论文并翻译摘要] 插件导入失败 {str(err)}')

+

+ ###################### 第n组插件 ###########################

+ return function_plugins
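The registry built by `get_crazy_functions` mixes two ideas: an `AsButton` flag that decides where a plugin appears, and a `try`/`except` guard around plugins whose import may fail. The sketch below illustrates both under stated assumptions: `split_plugins` and `register_optional` are hypothetical helpers, and treating a missing `AsButton` key as `True` is an assumption inferred from the entries that omit it.

```python
def split_plugins(function_plugins):
    """Partition plugin names into (buttons, dropdown) by their AsButton flag."""
    buttons, dropdown = [], []
    for name, plugin in function_plugins.items():
        # Assumption: a missing "AsButton" key means the plugin is shown as a button.
        if plugin.get("AsButton", True):
            buttons.append(name)
        else:
            dropdown.append(name)
    return buttons, dropdown


def register_optional(function_plugins, name, loader):
    """Add a plugin whose loading may fail; report the failure instead of crashing."""
    try:
        function_plugins[name] = loader()
    except Exception as err:
        print(f"[{name}] plugin import failed: {err}")
        return False
    return True


def failing_loader():
    raise ImportError("missing dependency")


plugins = {
    "parse-python-project": {"Color": "stop", "Function": print},
    "parse-go-project": {"Color": "stop", "AsButton": False, "Function": print},
}
register_optional(plugins, "optional-plugin", lambda: {"AsButton": False, "Function": print})
register_optional(plugins, "broken-plugin", failing_loader)

buttons, dropdown = split_plugins(plugins)
print(buttons)
print(dropdown)
```

Guarding only the less-tested group keeps one broken dependency from taking down the whole plugin list.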

diff --git a/crazy_functions/__init__.py b/crazy_functions/__init__.py

new file mode 100644

index 0000000..e69de29

diff --git a/crazy_functions/crazy_utils.py b/crazy_functions/crazy_utils.py

new file mode 100644

index 0000000..bdd6e2b

--- /dev/null

+++ b/crazy_functions/crazy_utils.py

@@ -0,0 +1,153 @@

+

+

+def request_gpt_model_in_new_thread_with_ui_alive(inputs, inputs_show_user, top_p, temperature, chatbot, history, sys_prompt, refresh_interval=0.2):

+ import time

+ from concurrent.futures import ThreadPoolExecutor

+ from request_llm.bridge_chatgpt import predict_no_ui_long_connection

+ # 用户反馈

+ chatbot.append([inputs_show_user, ""])

+ msg = '正常'

+ yield chatbot, [], msg

+ executor = ThreadPoolExecutor(max_workers=16)

+ mutable = ["", time.time()]

+ future = executor.submit(lambda:

+ predict_no_ui_long_connection(

+ inputs=inputs, top_p=top_p, temperature=temperature, history=history, sys_prompt=sys_prompt, observe_window=mutable)

+ )

+ while True:

+ # yield一次以刷新前端页面

+ time.sleep(refresh_interval)

+ # “喂狗”(看门狗)

+ mutable[1] = time.time()

+ if future.done():

+ break

+ chatbot[-1] = [chatbot[-1][0], mutable[0]]

+ msg = "正常"

+ yield chatbot, [], msg

+ return future.result()
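The function above runs the request in a worker thread and keeps the UI alive by polling a shared `mutable` list while refreshing a timestamp (the "watchdog feed"). The sketch below reproduces that polling pattern in isolation. It is a simplified illustration: `slow_worker` stands in for `predict_no_ui_long_connection`, and the final result is yielded rather than returned from the generator.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def slow_worker(observe_window, chunks, delay=0.01):
    """Hypothetical worker: append text chunks to observe_window[0] as they 'arrive'."""
    for chunk in chunks:
        time.sleep(delay)
        observe_window[0] += chunk
    return observe_window[0]


def run_with_live_updates(chunks, refresh_interval=0.005):
    """Yield the partial text while the worker runs, then yield the final result."""
    observe_window = ["", time.time()]  # [partial output, last watchdog timestamp]
    with ThreadPoolExecutor(max_workers=1) as executor:
        future = executor.submit(slow_worker, observe_window, chunks)
        while not future.done():
            time.sleep(refresh_interval)
            observe_window[1] = time.time()  # feed the watchdog
            yield observe_window[0]
        yield future.result()


for partial in run_with_live_updates(["Hello", ", ", "world"]):
    print(repr(partial))
```

The timestamp gives the worker a way to notice that nobody is polling any more, which is presumably why the original passes the same list as `observe_window`.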

+

+

+def request_gpt_model_multi_threads_with_very_awesome_ui_and_high_efficiency(inputs_array, inputs_show_user_array, top_p, temperature, chatbot, history_array, sys_prompt_array, refresh_interval=0.2, max_workers=10, scroller_max_len=30):

+ import time

+ from concurrent.futures import ThreadPoolExecutor

+ from request_llm.bridge_chatgpt import predict_no_ui_long_connection

+ assert len(inputs_array) == len(history_array)

+ assert len(inputs_array) == len(sys_prompt_array)

+ executor = ThreadPoolExecutor(max_workers=max_workers)

+ n_frag = len(inputs_array)

+ # 用户反馈

+ chatbot.append(["请开始多线程操作。", ""])

+ msg = '正常'

+ yield chatbot, [], msg

+ # 异步原子

+ mutable = [["", time.time()] for _ in range(n_frag)]

+

+ def _req_gpt(index, inputs, history, sys_prompt):

+ gpt_say = predict_no_ui_long_connection(

+ inputs=inputs, top_p=top_p, temperature=temperature, history=history, sys_prompt=sys_prompt, observe_window=mutable[

+ index]

+ )

+ return gpt_say

+ # 异步任务开始

+ futures = [executor.submit(_req_gpt, index, inputs, history, sys_prompt) for index, inputs, history, sys_prompt in zip(

+ range(len(inputs_array)), inputs_array, history_array, sys_prompt_array)]

+ cnt = 0

+ while True:

+ # yield一次以刷新前端页面

+ time.sleep(refresh_interval)

+ cnt += 1

+ worker_done = [h.done() for h in futures]

+ if all(worker_done):

+ executor.shutdown()

+ break

+ # 更好的UI视觉效果

+ observe_win = []

+ # 每个线程都要“喂狗”(看门狗)

+ for thread_index, _ in enumerate(worker_done):

+ mutable[thread_index][1] = time.time()

+ # 在前端打印些好玩的东西

+ for thread_index, _ in enumerate(worker_done):

+ print_something_really_funny = "[ ...`"+mutable[thread_index][0][-scroller_max_len:].\

+ replace('\n', '').replace('```', '...').replace(

+ ' ', '.').replace('<br/>', '.....').replace('$', '.')+"`... ]"

+ observe_win.append(print_something_really_funny)

+ stat_str = ''.join([f'执行中: {obs}\n\n' if not done else '已完成\n\n' for done, obs in zip(

+ worker_done, observe_win)])

+ chatbot[-1] = [chatbot[-1][0],

+ f'多线程操作已经开始,完成情况: \n\n{stat_str}' + ''.join(['.']*(cnt % 10+1))]

+ msg = "正常"

+ yield chatbot, [], msg

+ # 异步任务结束

+ gpt_response_collection = []

+ for inputs_show_user, f in zip(inputs_show_user_array, futures):

+ gpt_res = f.result()

+ gpt_response_collection.extend([inputs_show_user, gpt_res])

+ return gpt_response_collection

+

+

+def breakdown_txt_to_satisfy_token_limit(txt, get_token_fn, limit):

+ def cut(txt_tocut, must_break_at_empty_line): # 递归

+ if get_token_fn(txt_tocut) <= limit:

+ return [txt_tocut]

+ else:

+ lines = txt_tocut.split('\n')

+ estimated_line_cut = limit / get_token_fn(txt_tocut) * len(lines)

+ estimated_line_cut = int(estimated_line_cut)

+ cnt = 0 # keeps cnt defined when estimated_line_cut is 0 and the loop body never runs

+ for cnt in reversed(range(estimated_line_cut)):

+ if must_break_at_empty_line:

+ if lines[cnt] != "":

+ continue

+ print(cnt)

+ prev = "\n".join(lines[:cnt])

+ post = "\n".join(lines[cnt:])

+ if get_token_fn(prev) < limit:

+ break

+ if cnt == 0:

+ print('what the fuck ?')

+ raise RuntimeError("存在一行极长的文本!")

+ # print(len(post))

+ # 列表递归接龙

+ result = [prev]

+ result.extend(cut(post, must_break_at_empty_line))

+ return result

+ try:

+ return cut(txt, must_break_at_empty_line=True)

+ except RuntimeError:

+ return cut(txt, must_break_at_empty_line=False)

+

+

+def breakdown_txt_to_satisfy_token_limit_for_pdf(txt, get_token_fn, limit):

+ def cut(txt_tocut, must_break_at_empty_line): # 递归

+ if get_token_fn(txt_tocut) <= limit:

+ return [txt_tocut]

+ else:

+ lines = txt_tocut.split('\n')

+ estimated_line_cut = limit / get_token_fn(txt_tocut) * len(lines)

+ estimated_line_cut = int(estimated_line_cut)

+ cnt = 0

+ for cnt in reversed(range(estimated_line_cut)):

+ if must_break_at_empty_line:

+ if lines[cnt] != "":

+ continue

+ print(cnt)

+ prev = "\n".join(lines[:cnt])

+ post = "\n".join(lines[cnt:])

+ if get_token_fn(prev) < limit:

+ break

+ if cnt == 0:

+ # print('what the fuck ? 存在一行极长的文本!')

+ raise RuntimeError("存在一行极长的文本!")

+ # print(len(post))

+ # 列表递归接龙

+ result = [prev]

+ result.extend(cut(post, must_break_at_empty_line))

+ return result

+ try:

+ return cut(txt, must_break_at_empty_line=True)

+ except RuntimeError:

+ try:

+ return cut(txt, must_break_at_empty_line=False)

+ except RuntimeError:

+ # 这个中文的句号是故意的,作为一个标识而存在

+ res = cut(txt.replace('.', '。\n'), must_break_at_empty_line=False)

+ return [r.replace('。\n', '.') for r in res]
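The two `breakdown_txt_to_satisfy_token_limit*` functions share one idea: estimate how many leading lines fit under the limit, walk backwards to a usable cut (preferably an empty line), and recurse on the remainder. The sketch below is a simplified variant of that idea, not a copy of the patched code: `count_tokens` is a hypothetical word-based counter, and the cut search differs in small details such as using `<=` for the head check.

```python
def count_tokens(text):
    """Hypothetical token counter: one token per whitespace-separated word."""
    return len(text.split())


def split_by_token_limit(text, get_token_fn, limit, break_at_empty_line=True):
    """Recursively split `text` at line boundaries so every piece fits within `limit`."""
    if get_token_fn(text) <= limit:
        return [text]
    lines = text.split("\n")
    # Estimate how many leading lines fit, then walk backwards to a valid cut.
    estimate = int(limit / get_token_fn(text) * len(lines))
    for cut in range(estimate, 0, -1):
        if break_at_empty_line and lines[cut] != "":
            continue
        head = "\n".join(lines[:cut])
        if get_token_fn(head) <= limit:
            tail = "\n".join(lines[cut:])
            return [head] + split_by_token_limit(tail, get_token_fn, limit, break_at_empty_line)
    raise RuntimeError("no valid cut point: a single line may exceed the limit")


def breakdown(text, get_token_fn, limit):
    """Prefer cuts at empty lines; fall back to any line boundary if that fails."""
    try:
        return split_by_token_limit(text, get_token_fn, limit, True)
    except RuntimeError:
        return split_by_token_limit(text, get_token_fn, limit, False)


text = "a b c\n\nd e f\n\ng h i"
pieces = breakdown(text, count_tokens, limit=4)
print(pieces)
```

Because every cut falls on a line boundary, joining the pieces with `"\n"` reconstructs the input, which is a convenient property to assert in tests.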

diff --git a/crazy_functions/test_project/cpp/longcode/jpgd.cpp b/crazy_functions/test_project/cpp/longcode/jpgd.cpp

new file mode 100644

index 0000000..36d06c8

--- /dev/null

+++ b/crazy_functions/test_project/cpp/longcode/jpgd.cpp

@@ -0,0 +1,3276 @@

+// jpgd.cpp - C++ class for JPEG decompression.

+// Public domain, Rich Geldreich

+// Last updated Apr. 16, 2011

+// Alex Evans: Linear memory allocator (taken from jpge.h).

+//

+// Supports progressive and baseline sequential JPEG image files, and the most common chroma subsampling factors: Y, H1V1, H2V1, H1V2, and H2V2.

+//

+// Chroma upsampling quality: H2V2 is upsampled in the frequency domain, H2V1 and H1V2 are upsampled using point sampling.

+// Chroma upsampling reference: "Fast Scheme for Image Size Change in the Compressed Domain"

+// http://vision.ai.uiuc.edu/~dugad/research/dct/index.html

+

+#include "jpgd.h"

+#include <string.h>

+

+#include <assert.h>

+// BEGIN EPIC MOD

+#define JPGD_ASSERT(x) { assert(x); CA_ASSUME(x); } (void)0

+// END EPIC MOD

+

+#ifdef _MSC_VER

+#pragma warning (disable : 4611) // warning C4611: interaction between '_setjmp' and C++ object destruction is non-portable

+#endif

+

+// Set to 1 to enable freq. domain chroma upsampling on images using H2V2 subsampling (0=faster nearest neighbor sampling).

+// This is slower, but results in higher quality on images with highly saturated colors.

+#define JPGD_SUPPORT_FREQ_DOMAIN_UPSAMPLING 1

+

+#define JPGD_TRUE (1)

+#define JPGD_FALSE (0)

+

+#define JPGD_MAX(a,b) (((a)>(b)) ? (a) : (b))

+#define JPGD_MIN(a,b) (((a)<(b)) ? (a) : (b))

+

+namespace jpgd {

+

+ static inline void *jpgd_malloc(size_t nSize) { return FMemory::Malloc(nSize); }

+ static inline void jpgd_free(void *p) { FMemory::Free(p); }

+

+// BEGIN EPIC MOD

+//@UE3 - use UE3 BGRA encoding instead of assuming RGBA

+ // stolen from IImageWrapper.h

+ enum ERGBFormatJPG

+ {

+ Invalid = -1,

+ RGBA = 0,

+ BGRA = 1,

+ Gray = 2,

+ };

+ static ERGBFormatJPG jpg_format;

+// END EPIC MOD

+

+ // DCT coefficients are stored in this sequence.

+ static int g_ZAG[64] = { 0,1,8,16,9,2,3,10,17,24,32,25,18,11,4,5,12,19,26,33,40,48,41,34,27,20,13,6,7,14,21,28,35,42,49,56,57,50,43,36,29,22,15,23,30,37,44,51,58,59,52,45,38,31,39,46,53,60,61,54,47,55,62,63 };

+

+ enum JPEG_MARKER

+ {

+ M_SOF0 = 0xC0, M_SOF1 = 0xC1, M_SOF2 = 0xC2, M_SOF3 = 0xC3, M_SOF5 = 0xC5, M_SOF6 = 0xC6, M_SOF7 = 0xC7, M_JPG = 0xC8,

+ M_SOF9 = 0xC9, M_SOF10 = 0xCA, M_SOF11 = 0xCB, M_SOF13 = 0xCD, M_SOF14 = 0xCE, M_SOF15 = 0xCF, M_DHT = 0xC4, M_DAC = 0xCC,

+ M_RST0 = 0xD0, M_RST1 = 0xD1, M_RST2 = 0xD2, M_RST3 = 0xD3, M_RST4 = 0xD4, M_RST5 = 0xD5, M_RST6 = 0xD6, M_RST7 = 0xD7,

+ M_SOI = 0xD8, M_EOI = 0xD9, M_SOS = 0xDA, M_DQT = 0xDB, M_DNL = 0xDC, M_DRI = 0xDD, M_DHP = 0xDE, M_EXP = 0xDF,

+ M_APP0 = 0xE0, M_APP15 = 0xEF, M_JPG0 = 0xF0, M_JPG13 = 0xFD, M_COM = 0xFE, M_TEM = 0x01, M_ERROR = 0x100, RST0 = 0xD0

+ };

+

+ enum JPEG_SUBSAMPLING { JPGD_GRAYSCALE = 0, JPGD_YH1V1, JPGD_YH2V1, JPGD_YH1V2, JPGD_YH2V2 };

+

+#define CONST_BITS 13

+#define PASS1_BITS 2

+#define SCALEDONE ((int32)1)

+

+#define FIX_0_298631336 ((int32)2446) /* FIX(0.298631336) */

+#define FIX_0_390180644 ((int32)3196) /* FIX(0.390180644) */

+#define FIX_0_541196100 ((int32)4433) /* FIX(0.541196100) */

+#define FIX_0_765366865 ((int32)6270) /* FIX(0.765366865) */

+#define FIX_0_899976223 ((int32)7373) /* FIX(0.899976223) */

+#define FIX_1_175875602 ((int32)9633) /* FIX(1.175875602) */

+#define FIX_1_501321110 ((int32)12299) /* FIX(1.501321110) */

+#define FIX_1_847759065 ((int32)15137) /* FIX(1.847759065) */

+#define FIX_1_961570560 ((int32)16069) /* FIX(1.961570560) */

+#define FIX_2_053119869 ((int32)16819) /* FIX(2.053119869) */

+#define FIX_2_562915447 ((int32)20995) /* FIX(2.562915447) */

+#define FIX_3_072711026 ((int32)25172) /* FIX(3.072711026) */

+

+#define DESCALE(x,n) (((x) + (SCALEDONE << ((n)-1))) >> (n))

+#define DESCALE_ZEROSHIFT(x,n) (((x) + (128 << (n)) + (SCALEDONE << ((n)-1))) >> (n))

+

+#define MULTIPLY(var, cnst) ((var) * (cnst))

+

+#define CLAMP(i) ((static_cast<uint32>(i) > 255) ? (((~i) >> 31) & 0xFF) : (i))

+

+ // Compiler creates a fast path 1D IDCT for X non-zero columns

+ template <int NONZERO_COLS>

+ struct Row

+ {

+ static void idct(int* pTemp, const jpgd_block_t* pSrc)

+ {

+ // ACCESS_COL() will be optimized at compile time to either an array access, or 0.

+#define ACCESS_COL(x) (((x) < NONZERO_COLS) ? (int)pSrc[x] : 0)

+

+ const int z2 = ACCESS_COL(2), z3 = ACCESS_COL(6);

+

+ const int z1 = MULTIPLY(z2 + z3, FIX_0_541196100);

+ const int tmp2 = z1 + MULTIPLY(z3, - FIX_1_847759065);

+ const int tmp3 = z1 + MULTIPLY(z2, FIX_0_765366865);

+

+ const int tmp0 = (ACCESS_COL(0) + ACCESS_COL(4)) << CONST_BITS;

+ const int tmp1 = (ACCESS_COL(0) - ACCESS_COL(4)) << CONST_BITS;

+

+ const int tmp10 = tmp0 + tmp3, tmp13 = tmp0 - tmp3, tmp11 = tmp1 + tmp2, tmp12 = tmp1 - tmp2;

+

+ const int atmp0 = ACCESS_COL(7), atmp1 = ACCESS_COL(5), atmp2 = ACCESS_COL(3), atmp3 = ACCESS_COL(1);

+

+ const int bz1 = atmp0 + atmp3, bz2 = atmp1 + atmp2, bz3 = atmp0 + atmp2, bz4 = atmp1 + atmp3;

+ const int bz5 = MULTIPLY(bz3 + bz4, FIX_1_175875602);

+

+ const int az1 = MULTIPLY(bz1, - FIX_0_899976223);

+ const int az2 = MULTIPLY(bz2, - FIX_2_562915447);

+ const int az3 = MULTIPLY(bz3, - FIX_1_961570560) + bz5;

+ const int az4 = MULTIPLY(bz4, - FIX_0_390180644) + bz5;

+

+ const int btmp0 = MULTIPLY(atmp0, FIX_0_298631336) + az1 + az3;

+ const int btmp1 = MULTIPLY(atmp1, FIX_2_053119869) + az2 + az4;

+ const int btmp2 = MULTIPLY(atmp2, FIX_3_072711026) + az2 + az3;

+ const int btmp3 = MULTIPLY(atmp3, FIX_1_501321110) + az1 + az4;

+

+ pTemp[0] = DESCALE(tmp10 + btmp3, CONST_BITS-PASS1_BITS);

+ pTemp[7] = DESCALE(tmp10 - btmp3, CONST_BITS-PASS1_BITS);

+ pTemp[1] = DESCALE(tmp11 + btmp2, CONST_BITS-PASS1_BITS);

+ pTemp[6] = DESCALE(tmp11 - btmp2, CONST_BITS-PASS1_BITS);

+ pTemp[2] = DESCALE(tmp12 + btmp1, CONST_BITS-PASS1_BITS);

+ pTemp[5] = DESCALE(tmp12 - btmp1, CONST_BITS-PASS1_BITS);

+ pTemp[3] = DESCALE(tmp13 + btmp0, CONST_BITS-PASS1_BITS);

+ pTemp[4] = DESCALE(tmp13 - btmp0, CONST_BITS-PASS1_BITS);

+ }

+ };

+

+ template <>

+ struct Row<0>

+ {

+ static void idct(int* pTemp, const jpgd_block_t* pSrc)

+ {

+#ifdef _MSC_VER

+ pTemp; pSrc;

+#endif

+ }

+ };

+

+ template <>

+ struct Row<1>

+ {

+ static void idct(int* pTemp, const jpgd_block_t* pSrc)

+ {

+ const int dcval = (pSrc[0] << PASS1_BITS);

+

+ pTemp[0] = dcval;

+ pTemp[1] = dcval;

+ pTemp[2] = dcval;

+ pTemp[3] = dcval;

+ pTemp[4] = dcval;

+ pTemp[5] = dcval;

+ pTemp[6] = dcval;

+ pTemp[7] = dcval;

+ }

+ };

+

+ // Compiler creates a fast path 1D IDCT for X non-zero rows

+ template <int NONZERO_ROWS>

+ struct Col

+ {

+ static void idct(uint8* pDst_ptr, const int* pTemp)

+ {

+ // ACCESS_ROW() will be optimized at compile time to either an array access, or 0.

+#define ACCESS_ROW(x) (((x) < NONZERO_ROWS) ? pTemp[x * 8] : 0)

+

+ const int z2 = ACCESS_ROW(2);

+ const int z3 = ACCESS_ROW(6);

+

+ const int z1 = MULTIPLY(z2 + z3, FIX_0_541196100);

+ const int tmp2 = z1 + MULTIPLY(z3, - FIX_1_847759065);

+ const int tmp3 = z1 + MULTIPLY(z2, FIX_0_765366865);

+

+ const int tmp0 = (ACCESS_ROW(0) + ACCESS_ROW(4)) << CONST_BITS;

+ const int tmp1 = (ACCESS_ROW(0) - ACCESS_ROW(4)) << CONST_BITS;

+

+ const int tmp10 = tmp0 + tmp3, tmp13 = tmp0 - tmp3, tmp11 = tmp1 + tmp2, tmp12 = tmp1 - tmp2;

+

+ const int atmp0 = ACCESS_ROW(7), atmp1 = ACCESS_ROW(5), atmp2 = ACCESS_ROW(3), atmp3 = ACCESS_ROW(1);

+

+ const int bz1 = atmp0 + atmp3, bz2 = atmp1 + atmp2, bz3 = atmp0 + atmp2, bz4 = atmp1 + atmp3;

+ const int bz5 = MULTIPLY(bz3 + bz4, FIX_1_175875602);

+

+ const int az1 = MULTIPLY(bz1, - FIX_0_899976223);

+ const int az2 = MULTIPLY(bz2, - FIX_2_562915447);

+ const int az3 = MULTIPLY(bz3, - FIX_1_961570560) + bz5;

+ const int az4 = MULTIPLY(bz4, - FIX_0_390180644) + bz5;

+

+ const int btmp0 = MULTIPLY(atmp0, FIX_0_298631336) + az1 + az3;

+ const int btmp1 = MULTIPLY(atmp1, FIX_2_053119869) + az2 + az4;

+ const int btmp2 = MULTIPLY(atmp2, FIX_3_072711026) + az2 + az3;

+ const int btmp3 = MULTIPLY(atmp3, FIX_1_501321110) + az1 + az4;

+

+ int i = DESCALE_ZEROSHIFT(tmp10 + btmp3, CONST_BITS+PASS1_BITS+3);

+ pDst_ptr[8*0] = (uint8)CLAMP(i);

+

+ i = DESCALE_ZEROSHIFT(tmp10 - btmp3, CONST_BITS+PASS1_BITS+3);

+ pDst_ptr[8*7] = (uint8)CLAMP(i);

+

+ i = DESCALE_ZEROSHIFT(tmp11 + btmp2, CONST_BITS+PASS1_BITS+3);

+ pDst_ptr[8*1] = (uint8)CLAMP(i);

+

+ i = DESCALE_ZEROSHIFT(tmp11 - btmp2, CONST_BITS+PASS1_BITS+3);

+ pDst_ptr[8*6] = (uint8)CLAMP(i);

+

+ i = DESCALE_ZEROSHIFT(tmp12 + btmp1, CONST_BITS+PASS1_BITS+3);

+ pDst_ptr[8*2] = (uint8)CLAMP(i);

+

+ i = DESCALE_ZEROSHIFT(tmp12 - btmp1, CONST_BITS+PASS1_BITS+3);

+ pDst_ptr[8*5] = (uint8)CLAMP(i);

+

+ i = DESCALE_ZEROSHIFT(tmp13 + btmp0, CONST_BITS+PASS1_BITS+3);

+ pDst_ptr[8*3] = (uint8)CLAMP(i);

+

+ i = DESCALE_ZEROSHIFT(tmp13 - btmp0, CONST_BITS+PASS1_BITS+3);

+ pDst_ptr[8*4] = (uint8)CLAMP(i);

+ }

+ };

+

+ template <>

+ struct Col<1>

+ {

+ static void idct(uint8* pDst_ptr, const int* pTemp)

+ {

+ int dcval = DESCALE_ZEROSHIFT(pTemp[0], PASS1_BITS+3);

+ const uint8 dcval_clamped = (uint8)CLAMP(dcval);

+ pDst_ptr[0*8] = dcval_clamped;

+ pDst_ptr[1*8] = dcval_clamped;

+ pDst_ptr[2*8] = dcval_clamped;

+ pDst_ptr[3*8] = dcval_clamped;

+ pDst_ptr[4*8] = dcval_clamped;

+ pDst_ptr[5*8] = dcval_clamped;

+ pDst_ptr[6*8] = dcval_clamped;

+ pDst_ptr[7*8] = dcval_clamped;

+ }

+ };

+

+ static const uint8 s_idct_row_table[] =

+ {

+ 1,0,0,0,0,0,0,0, 2,0,0,0,0,0,0,0, 2,1,0,0,0,0,0,0, 2,1,1,0,0,0,0,0, 2,2,1,0,0,0,0,0, 3,2,1,0,0,0,0,0, 4,2,1,0,0,0,0,0, 4,3,1,0,0,0,0,0,

+ 4,3,2,0,0,0,0,0, 4,3,2,1,0,0,0,0, 4,3,2,1,1,0,0,0, 4,3,2,2,1,0,0,0, 4,3,3,2,1,0,0,0, 4,4,3,2,1,0,0,0, 5,4,3,2,1,0,0,0, 6,4,3,2,1,0,0,0,

+ 6,5,3,2,1,0,0,0, 6,5,4,2,1,0,0,0, 6,5,4,3,1,0,0,0, 6,5,4,3,2,0,0,0, 6,5,4,3,2,1,0,0, 6,5,4,3,2,1,1,0, 6,5,4,3,2,2,1,0, 6,5,4,3,3,2,1,0,

+ 6,5,4,4,3,2,1,0, 6,5,5,4,3,2,1,0, 6,6,5,4,3,2,1,0, 7,6,5,4,3,2,1,0, 8,6,5,4,3,2,1,0, 8,7,5,4,3,2,1,0, 8,7,6,4,3,2,1,0, 8,7,6,5,3,2,1,0,

+ 8,7,6,5,4,2,1,0, 8,7,6,5,4,3,1,0, 8,7,6,5,4,3,2,0, 8,7,6,5,4,3,2,1, 8,7,6,5,4,3,2,2, 8,7,6,5,4,3,3,2, 8,7,6,5,4,4,3,2, 8,7,6,5,5,4,3,2,

+ 8,7,6,6,5,4,3,2, 8,7,7,6,5,4,3,2, 8,8,7,6,5,4,3,2, 8,8,8,6,5,4,3,2, 8,8,8,7,5,4,3,2, 8,8,8,7,6,4,3,2, 8,8,8,7,6,5,3,2, 8,8,8,7,6,5,4,2,

+ 8,8,8,7,6,5,4,3, 8,8,8,7,6,5,4,4, 8,8,8,7,6,5,5,4, 8,8,8,7,6,6,5,4, 8,8,8,7,7,6,5,4, 8,8,8,8,7,6,5,4, 8,8,8,8,8,6,5,4, 8,8,8,8,8,7,5,4,

+ 8,8,8,8,8,7,6,4, 8,8,8,8,8,7,6,5, 8,8,8,8,8,7,6,6, 8,8,8,8,8,7,7,6, 8,8,8,8,8,8,7,6, 8,8,8,8,8,8,8,6, 8,8,8,8,8,8,8,7, 8,8,8,8,8,8,8,8,

+ };

+

+ static const uint8 s_idct_col_table[] = { 1, 1, 2, 3, 3, 3, 3, 3, 3, 4, 5, 5, 5, 5, 5, 5, 5, 5, 5, 5, 6, 7, 7, 7, 7, 7, 7, 7, 7, 7, 7, 7, 7, 7, 7, 8, 8, 8, 8, 8, 8, 8, 8, 8, 8, 8, 8, 8, 8, 8, 8, 8, 8, 8, 8, 8, 8, 8, 8, 8, 8, 8, 8, 8 };

+

+ void idct(const jpgd_block_t* pSrc_ptr, uint8* pDst_ptr, int block_max_zag)

+ {

+ JPGD_ASSERT(block_max_zag >= 1);

+ JPGD_ASSERT(block_max_zag <= 64);

+

+ if (block_max_zag == 1)

+ {

+ int k = ((pSrc_ptr[0] + 4) >> 3) + 128;

+ k = CLAMP(k);

+ k = k | (k<<8);

+ k = k | (k<<16);

+

+ for (int i = 8; i > 0; i--)

+ {

+ *(int*)&pDst_ptr[0] = k;

+ *(int*)&pDst_ptr[4] = k;

+ pDst_ptr += 8;

+ }

+ return;

+ }

+

+ int temp[64];

+

+ const jpgd_block_t* pSrc = pSrc_ptr;

+ int* pTemp = temp;

+

+ const uint8* pRow_tab = &s_idct_row_table[(block_max_zag - 1) * 8];

+ int i;

+ for (i = 8; i > 0; i--, pRow_tab++)

+ {

+ switch (*pRow_tab)

+ {

+ case 0: Row<0>::idct(pTemp, pSrc); break;

+ case 1: Row<1>::idct(pTemp, pSrc); break;

+ case 2: Row<2>::idct(pTemp, pSrc); break;

+ case 3: Row<3>::idct(pTemp, pSrc); break;

+ case 4: Row<4>::idct(pTemp, pSrc); break;

+ case 5: Row<5>::idct(pTemp, pSrc); break;

+ case 6: Row<6>::idct(pTemp, pSrc); break;

+ case 7: Row<7>::idct(pTemp, pSrc); break;

+ case 8: Row<8>::idct(pTemp, pSrc); break;

+ }

+

+ pSrc += 8;

+ pTemp += 8;

+ }

+

+ pTemp = temp;

+

+ const int nonzero_rows = s_idct_col_table[block_max_zag - 1];

+ for (i = 8; i > 0; i--)

+ {

+ switch (nonzero_rows)

+ {

+ case 1: Col<1>::idct(pDst_ptr, pTemp); break;

+ case 2: Col<2>::idct(pDst_ptr, pTemp); break;

+ case 3: Col<3>::idct(pDst_ptr, pTemp); break;

+ case 4: Col<4>::idct(pDst_ptr, pTemp); break;

+ case 5: Col<5>::idct(pDst_ptr, pTemp); break;

+ case 6: Col<6>::idct(pDst_ptr, pTemp); break;

+ case 7: Col<7>::idct(pDst_ptr, pTemp); break;

+ case 8: Col<8>::idct(pDst_ptr, pTemp); break;

+ }

+

+ pTemp++;

+ pDst_ptr++;

+ }

+ }

+

+ void idct_4x4(const jpgd_block_t* pSrc_ptr, uint8* pDst_ptr)

+ {

+ int temp[64];

+ int* pTemp = temp;

+ const jpgd_block_t* pSrc = pSrc_ptr;

+

+ for (int i = 4; i > 0; i--)

+ {

+ Row<4>::idct(pTemp, pSrc);

+ pSrc += 8;

+ pTemp += 8;

+ }

+

+ pTemp = temp;

+ for (int i = 8; i > 0; i--)

+ {

+ Col<4>::idct(pDst_ptr, pTemp);

+ pTemp++;

+ pDst_ptr++;

+ }

+ }

+

+ // Retrieve one character from the input stream.

+ inline uint jpeg_decoder::get_char()

+ {

+ // Any bytes remaining in buffer?

+ if (!m_in_buf_left)

+ {

+ // Try to get more bytes.

+ prep_in_buffer();

+ // Still nothing to get?

+ if (!m_in_buf_left)

+ {

+ // Pad the end of the stream with 0xFF 0xD9 (EOI marker)

+ int t = m_tem_flag;

+ m_tem_flag ^= 1;

+ if (t)

+ return 0xD9;

+ else

+ return 0xFF;

+ }

+ }

+

+ uint c = *m_pIn_buf_ofs++;

+ m_in_buf_left--;

+

+ return c;

+ }

+

+ // Same as previous method, except can indicate if the character is a pad character or not.

+ inline uint jpeg_decoder::get_char(bool *pPadding_flag)

+ {

+ if (!m_in_buf_left)

+ {

+ prep_in_buffer();

+ if (!m_in_buf_left)

+ {

+ *pPadding_flag = true;

+ int t = m_tem_flag;

+ m_tem_flag ^= 1;

+ if (t)

+ return 0xD9;

+ else

+ return 0xFF;

+ }

+ }

+

+ *pPadding_flag = false;

+

+ uint c = *m_pIn_buf_ofs++;

+ m_in_buf_left--;

+

+ return c;

+ }

+

+ // Inserts a previously retrieved character back into the input buffer.

+ inline void jpeg_decoder::stuff_char(uint8 q)

+ {

+ *(--m_pIn_buf_ofs) = q;

+ m_in_buf_left++;

+ }

+

+ // Retrieves one character from the input stream, but does not read past markers. Will continue to return 0xFF when a marker is encountered.

+ inline uint8 jpeg_decoder::get_octet()

+ {

+ bool padding_flag;

+ int c = get_char(&padding_flag);

+

+ if (c == 0xFF)

+ {

+ if (padding_flag)

+ return 0xFF;

+

+ c = get_char(&padding_flag);

+ if (padding_flag)

+ {

+ stuff_char(0xFF);

+ return 0xFF;

+ }

+

+ if (c == 0x00)

+ return 0xFF;

+ else

+ {

+ stuff_char(static_cast<uint8>(c));

+ stuff_char(0xFF);

+ return 0xFF;

+ }

+ }

+

+ return static_cast<uint8>(c);

+ }

+

+ // Retrieves a variable number of bits from the input stream. Does not recognize markers.

+ inline uint jpeg_decoder::get_bits(int num_bits)

+ {

+ if (!num_bits)

+ return 0;

+

+ uint i = m_bit_buf >> (32 - num_bits);

+

+ if ((m_bits_left -= num_bits) <= 0)

+ {

+ m_bit_buf <<= (num_bits += m_bits_left);

+

+ uint c1 = get_char();

+ uint c2 = get_char();

+ m_bit_buf = (m_bit_buf & 0xFFFF0000) | (c1 << 8) | c2;

+

+ m_bit_buf <<= -m_bits_left;

+

+ m_bits_left += 16;

+

+ JPGD_ASSERT(m_bits_left >= 0);

+ }

+ else

+ m_bit_buf <<= num_bits;

+

+ return i;

+ }

+

+ // Retrieves a variable number of bits from the input stream. Markers will not be read into the input bit buffer. Instead, an infinite number of all 1's will be returned when a marker is encountered.

+ inline uint jpeg_decoder::get_bits_no_markers(int num_bits)

+ {

+ if (!num_bits)

+ return 0;

+

+ uint i = m_bit_buf >> (32 - num_bits);

+

+ if ((m_bits_left -= num_bits) <= 0)

+ {

+ m_bit_buf <<= (num_bits += m_bits_left);

+

+ if ((m_in_buf_left < 2) || (m_pIn_buf_ofs[0] == 0xFF) || (m_pIn_buf_ofs[1] == 0xFF))

+ {

+ uint c1 = get_octet();

+ uint c2 = get_octet();

+ m_bit_buf |= (c1 << 8) | c2;

+ }

+ else

+ {

+ m_bit_buf |= ((uint)m_pIn_buf_ofs[0] << 8) | m_pIn_buf_ofs[1];

+ m_in_buf_left -= 2;

+ m_pIn_buf_ofs += 2;

+ }

+

+ m_bit_buf <<= -m_bits_left;

+

+ m_bits_left += 16;

+

+ JPGD_ASSERT(m_bits_left >= 0);

+ }

+ else

+ m_bit_buf <<= num_bits;

+

+ return i;

+ }

+

+ // Decodes a Huffman encoded symbol.

+ inline int jpeg_decoder::huff_decode(huff_tables *pH)

+ {

+ int symbol;

+

+ // Check first 8-bits: do we have a complete symbol?

+ if ((symbol = pH->look_up[m_bit_buf >> 24]) < 0)

+ {

+ // Decode more bits, use a tree traversal to find symbol.

+ int ofs = 23;

+ do

+ {

+ symbol = pH->tree[-(int)(symbol + ((m_bit_buf >> ofs) & 1))];

+ ofs--;

+ } while (symbol < 0);

+

+ get_bits_no_markers(8 + (23 - ofs));

+ }

+ else

+ get_bits_no_markers(pH->code_size[symbol]);

+

+ return symbol;

+ }

+

+ // Decodes a Huffman encoded symbol and also retrieves its extra bits into extra_bits.

+ inline int jpeg_decoder::huff_decode(huff_tables *pH, int& extra_bits)

+ {

+ int symbol;

+

+ // Check first 8-bits: do we have a complete symbol?

+ if ((symbol = pH->look_up2[m_bit_buf >> 24]) < 0)

+ {

+ // Use a tree traversal to find symbol.

+ int ofs = 23;

+ do

+ {

+ symbol = pH->tree[-(int)(symbol + ((m_bit_buf >> ofs) & 1))];

+ ofs--;

+ } while (symbol < 0);

+

+ get_bits_no_markers(8 + (23 - ofs));

+

+ extra_bits = get_bits_no_markers(symbol & 0xF);

+ }

+ else

+ {

+ JPGD_ASSERT(((symbol >> 8) & 31) == pH->code_size[symbol & 255] + ((symbol & 0x8000) ? (symbol & 15) : 0));

+

+ if (symbol & 0x8000)

+ {

+ get_bits_no_markers((symbol >> 8) & 31);

+ extra_bits = symbol >> 16;

+ }

+ else

+ {

+ int code_size = (symbol >> 8) & 31;

+ int num_extra_bits = symbol & 0xF;

+ int bits = code_size + num_extra_bits;

+ if (bits <= (m_bits_left + 16))

+ extra_bits = get_bits_no_markers(bits) & ((1 << num_extra_bits) - 1);

+ else

+ {

+ get_bits_no_markers(code_size);

+ extra_bits = get_bits_no_markers(num_extra_bits);

+ }

+ }

+

+ symbol &= 0xFF;

+ }

+

+ return symbol;

+ }

+

+ // Tables and macro used to fully decode the DPCM differences.

+ static const int s_extend_test[16] = { 0, 0x0001, 0x0002, 0x0004, 0x0008, 0x0010, 0x0020, 0x0040, 0x0080, 0x0100, 0x0200, 0x0400, 0x0800, 0x1000, 0x2000, 0x4000 };

+ static const int s_extend_offset[16] = { 0, -1, -3, -7, -15, -31, -63, -127, -255, -511, -1023, -2047, -4095, -8191, -16383, -32767 };

+ static const int s_extend_mask[] = { 0, (1<<0), (1<<1), (1<<2), (1<<3), (1<<4), (1<<5), (1<<6), (1<<7), (1<<8), (1<<9), (1<<10), (1<<11), (1<<12), (1<<13), (1<<14), (1<<15), (1<<16) };

+#define HUFF_EXTEND(x,s) ((x) < s_extend_test[s] ? (x) + s_extend_offset[s] : (x))

+

+ // Clamps a value between 0-255.

+ inline uint8 jpeg_decoder::clamp(int i)

+ {

+ if (static_cast<uint>(i) > 255)

+ i = (((~i) >> 31) & 0xFF);

+

+ return static_cast<uint8>(i);

+ }

+

+ namespace DCT_Upsample

+ {

+ struct Matrix44

+ {

+ typedef int Element_Type;

+ enum { NUM_ROWS = 4, NUM_COLS = 4 };

+

+ Element_Type v[NUM_ROWS][NUM_COLS];

+

+ inline int rows() const { return NUM_ROWS; }

+ inline int cols() const { return NUM_COLS; }

+

+ inline const Element_Type & at(int r, int c) const { return v[r][c]; }

+ inline Element_Type & at(int r, int c) { return v[r][c]; }

+

+ inline Matrix44() { }

+

+ inline Matrix44& operator += (const Matrix44& a)

+ {

+ for (int r = 0; r < NUM_ROWS; r++)

+ {

+ at(r, 0) += a.at(r, 0);

+ at(r, 1) += a.at(r, 1);

+ at(r, 2) += a.at(r, 2);

+ at(r, 3) += a.at(r, 3);

+ }

+ return *this;

+ }

+

+ inline Matrix44& operator -= (const Matrix44& a)

+ {

+ for (int r = 0; r < NUM_ROWS; r++)

+ {

+ at(r, 0) -= a.at(r, 0);

+ at(r, 1) -= a.at(r, 1);

+ at(r, 2) -= a.at(r, 2);

+ at(r, 3) -= a.at(r, 3);

+ }

+ return *this;

+ }

+

+ friend inline Matrix44 operator + (const Matrix44& a, const Matrix44& b)

+ {

+ Matrix44 ret;

+ for (int r = 0; r < NUM_ROWS; r++)

+ {

+ ret.at(r, 0) = a.at(r, 0) + b.at(r, 0);

+ ret.at(r, 1) = a.at(r, 1) + b.at(r, 1);

+ ret.at(r, 2) = a.at(r, 2) + b.at(r, 2);

+ ret.at(r, 3) = a.at(r, 3) + b.at(r, 3);

+ }

+ return ret;

+ }

+

+ friend inline Matrix44 operator - (const Matrix44& a, const Matrix44& b)

+ {

+ Matrix44 ret;

+ for (int r = 0; r < NUM_ROWS; r++)

+ {

+ ret.at(r, 0) = a.at(r, 0) - b.at(r, 0);

+ ret.at(r, 1) = a.at(r, 1) - b.at(r, 1);

+ ret.at(r, 2) = a.at(r, 2) - b.at(r, 2);

+ ret.at(r, 3) = a.at(r, 3) - b.at(r, 3);

+ }

+ return ret;

+ }

+

+ static inline void add_and_store(jpgd_block_t* pDst, const Matrix44& a, const Matrix44& b)

+ {

+ for (int r = 0; r < 4; r++)

+ {

+ pDst[0*8 + r] = static_cast<jpgd_block_t>(a.at(r, 0) + b.at(r, 0));

+ pDst[1*8 + r] = static_cast<jpgd_block_t>(a.at(r, 1) + b.at(r, 1));

+ pDst[2*8 + r] = static_cast<jpgd_block_t>(a.at(r, 2) + b.at(r, 2));

+ pDst[3*8 + r] = static_cast<jpgd_block_t>(a.at(r, 3) + b.at(r, 3));

+ }

+ }

+

+ static inline void sub_and_store(jpgd_block_t* pDst, const Matrix44& a, const Matrix44& b)

+ {

+ for (int r = 0; r < 4; r++)

+ {

+ pDst[0*8 + r] = static_cast<jpgd_block_t>(a.at(r, 0) - b.at(r, 0));

+ pDst[1*8 + r] = static_cast<jpgd_block_t>(a.at(r, 1) - b.at(r, 1));

+ pDst[2*8 + r] = static_cast<jpgd_block_t>(a.at(r, 2) - b.at(r, 2));

+ pDst[3*8 + r] = static_cast<jpgd_block_t>(a.at(r, 3) - b.at(r, 3));

+ }

+ }

+ };

+

+ const int FRACT_BITS = 10;

+ const int SCALE = 1 << FRACT_BITS;

+

+ typedef int Temp_Type;

+#define D(i) (((i) + (SCALE >> 1)) >> FRACT_BITS)

+#define F(i) ((int)((i) * SCALE + .5f))

+

+ // Any decent C++ compiler will optimize this at compile time to a 0, or an array access.

+#define AT(c, r) ((((c)>=NUM_COLS)||((r)>=NUM_ROWS)) ? 0 : pSrc[(c)+(r)*8])

+

+ // NUM_ROWS/NUM_COLS = # of non-zero rows/cols in input matrix

+ template <int NUM_ROWS, int NUM_COLS>

+ struct P_Q

+ {

+ static void calc(Matrix44& P, Matrix44& Q, const jpgd_block_t* pSrc)

+ {

+ // 4x8 = 4x8 times 8x8, matrix 0 is constant

+ const Temp_Type X000 = AT(0, 0);

+ const Temp_Type X001 = AT(0, 1);

+ const Temp_Type X002 = AT(0, 2);

+ const Temp_Type X003 = AT(0, 3);

+ const Temp_Type X004 = AT(0, 4);

+ const Temp_Type X005 = AT(0, 5);

+ const Temp_Type X006 = AT(0, 6);

+ const Temp_Type X007 = AT(0, 7);

+ const Temp_Type X010 = D(F(0.415735f) * AT(1, 0) + F(0.791065f) * AT(3, 0) + F(-0.352443f) * AT(5, 0) + F(0.277785f) * AT(7, 0));

+ const Temp_Type X011 = D(F(0.415735f) * AT(1, 1) + F(0.791065f) * AT(3, 1) + F(-0.352443f) * AT(5, 1) + F(0.277785f) * AT(7, 1));

+ const Temp_Type X012 = D(F(0.415735f) * AT(1, 2) + F(0.791065f) * AT(3, 2) + F(-0.352443f) * AT(5, 2) + F(0.277785f) * AT(7, 2));

+ const Temp_Type X013 = D(F(0.415735f) * AT(1, 3) + F(0.791065f) * AT(3, 3) + F(-0.352443f) * AT(5, 3) + F(0.277785f) * AT(7, 3));

+ const Temp_Type X014 = D(F(0.415735f) * AT(1, 4) + F(0.791065f) * AT(3, 4) + F(-0.352443f) * AT(5, 4) + F(0.277785f) * AT(7, 4));

+ const Temp_Type X015 = D(F(0.415735f) * AT(1, 5) + F(0.791065f) * AT(3, 5) + F(-0.352443f) * AT(5, 5) + F(0.277785f) * AT(7, 5));

+ const Temp_Type X016 = D(F(0.415735f) * AT(1, 6) + F(0.791065f) * AT(3, 6) + F(-0.352443f) * AT(5, 6) + F(0.277785f) * AT(7, 6));

+ const Temp_Type X017 = D(F(0.415735f) * AT(1, 7) + F(0.791065f) * AT(3, 7) + F(-0.352443f) * AT(5, 7) + F(0.277785f) * AT(7, 7));

+ const Temp_Type X020 = AT(4, 0);

+ const Temp_Type X021 = AT(4, 1);

+ const Temp_Type X022 = AT(4, 2);

+ const Temp_Type X023 = AT(4, 3);

+ const Temp_Type X024 = AT(4, 4);

+ const Temp_Type X025 = AT(4, 5);

+ const Temp_Type X026 = AT(4, 6);

+ const Temp_Type X027 = AT(4, 7);

+ const Temp_Type X030 = D(F(0.022887f) * AT(1, 0) + F(-0.097545f) * AT(3, 0) + F(0.490393f) * AT(5, 0) + F(0.865723f) * AT(7, 0));

+ const Temp_Type X031 = D(F(0.022887f) * AT(1, 1) + F(-0.097545f) * AT(3, 1) + F(0.490393f) * AT(5, 1) + F(0.865723f) * AT(7, 1));

+ const Temp_Type X032 = D(F(0.022887f) * AT(1, 2) + F(-0.097545f) * AT(3, 2) + F(0.490393f) * AT(5, 2) + F(0.865723f) * AT(7, 2));

+ const Temp_Type X033 = D(F(0.022887f) * AT(1, 3) + F(-0.097545f) * AT(3, 3) + F(0.490393f) * AT(5, 3) + F(0.865723f) * AT(7, 3));

+ const Temp_Type X034 = D(F(0.022887f) * AT(1, 4) + F(-0.097545f) * AT(3, 4) + F(0.490393f) * AT(5, 4) + F(0.865723f) * AT(7, 4));

+ const Temp_Type X035 = D(F(0.022887f) * AT(1, 5) + F(-0.097545f) * AT(3, 5) + F(0.490393f) * AT(5, 5) + F(0.865723f) * AT(7, 5));

+ const Temp_Type X036 = D(F(0.022887f) * AT(1, 6) + F(-0.097545f) * AT(3, 6) + F(0.490393f) * AT(5, 6) + F(0.865723f) * AT(7, 6));

+ const Temp_Type X037 = D(F(0.022887f) * AT(1, 7) + F(-0.097545f) * AT(3, 7) + F(0.490393f) * AT(5, 7) + F(0.865723f) * AT(7, 7));

+

+ // 4x4 = 4x8 times 8x4, matrix 1 is constant

+ P.at(0, 0) = X000;

+ P.at(0, 1) = D(X001 * F(0.415735f) + X003 * F(0.791065f) + X005 * F(-0.352443f) + X007 * F(0.277785f));

+ P.at(0, 2) = X004;

+ P.at(0, 3) = D(X001 * F(0.022887f) + X003 * F(-0.097545f) + X005 * F(0.490393f) + X007 * F(0.865723f));

+ P.at(1, 0) = X010;

+ P.at(1, 1) = D(X011 * F(0.415735f) + X013 * F(0.791065f) + X015 * F(-0.352443f) + X017 * F(0.277785f));

+ P.at(1, 2) = X014;

+ P.at(1, 3) = D(X011 * F(0.022887f) + X013 * F(-0.097545f) + X015 * F(0.490393f) + X017 * F(0.865723f));

+ P.at(2, 0) = X020;

+ P.at(2, 1) = D(X021 * F(0.415735f) + X023 * F(0.791065f) + X025 * F(-0.352443f) + X027 * F(0.277785f));

+ P.at(2, 2) = X024;

+ P.at(2, 3) = D(X021 * F(0.022887f) + X023 * F(-0.097545f) + X025 * F(0.490393f) + X027 * F(0.865723f));

+ P.at(3, 0) = X030;

+ P.at(3, 1) = D(X031 * F(0.415735f) + X033 * F(0.791065f) + X035 * F(-0.352443f) + X037 * F(0.277785f));

+ P.at(3, 2) = X034;

+ P.at(3, 3) = D(X031 * F(0.022887f) + X033 * F(-0.097545f) + X035 * F(0.490393f) + X037 * F(0.865723f));

+ // 40 muls 24 adds

+

+ // 4x4 = 4x8 times 8x4, matrix 1 is constant

+ Q.at(0, 0) = D(X001 * F(0.906127f) + X003 * F(-0.318190f) + X005 * F(0.212608f) + X007 * F(-0.180240f));

+ Q.at(0, 1) = X002;

+ Q.at(0, 2) = D(X001 * F(-0.074658f) + X003 * F(0.513280f) + X005 * F(0.768178f) + X007 * F(-0.375330f));

+ Q.at(0, 3) = X006;

+ Q.at(1, 0) = D(X011 * F(0.906127f) + X013 * F(-0.318190f) + X015 * F(0.212608f) + X017 * F(-0.180240f));

+ Q.at(1, 1) = X012;

+ Q.at(1, 2) = D(X011 * F(-0.074658f) + X013 * F(0.513280f) + X015 * F(0.768178f) + X017 * F(-0.375330f));

+ Q.at(1, 3) = X016;

+ Q.at(2, 0) = D(X021 * F(0.906127f) + X023 * F(-0.318190f) + X025 * F(0.212608f) + X027 * F(-0.180240f));

+ Q.at(2, 1) = X022;

+ Q.at(2, 2) = D(X021 * F(-0.074658f) + X023 * F(0.513280f) + X025 * F(0.768178f) + X027 * F(-0.375330f));

+ Q.at(2, 3) = X026;

+ Q.at(3, 0) = D(X031 * F(0.906127f) + X033 * F(-0.318190f) + X035 * F(0.212608f) + X037 * F(-0.180240f));

+ Q.at(3, 1) = X032;

+ Q.at(3, 2) = D(X031 * F(-0.074658f) + X033 * F(0.513280f) + X035 * F(0.768178f) + X037 * F(-0.375330f));

+ Q.at(3, 3) = X036;

+ // 40 muls 24 adds

+ }

+ };

+

+ template <int NUM_ROWS, int NUM_COLS>

+ struct R_S

+ {

+ static void calc(Matrix44& R, Matrix44& S, const jpgd_block_t* pSrc)

+ {

+ // 4x8 = 4x8 times 8x8, matrix 0 is constant

+ const Temp_Type X100 = D(F(0.906127f) * AT(1, 0) + F(-0.318190f) * AT(3, 0) + F(0.212608f) * AT(5, 0) + F(-0.180240f) * AT(7, 0));

+ const Temp_Type X101 = D(F(0.906127f) * AT(1, 1) + F(-0.318190f) * AT(3, 1) + F(0.212608f) * AT(5, 1) + F(-0.180240f) * AT(7, 1));

+ const Temp_Type X102 = D(F(0.906127f) * AT(1, 2) + F(-0.318190f) * AT(3, 2) + F(0.212608f) * AT(5, 2) + F(-0.180240f) * AT(7, 2));

+ const Temp_Type X103 = D(F(0.906127f) * AT(1, 3) + F(-0.318190f) * AT(3, 3) + F(0.212608f) * AT(5, 3) + F(-0.180240f) * AT(7, 3));

+ const Temp_Type X104 = D(F(0.906127f) * AT(1, 4) + F(-0.318190f) * AT(3, 4) + F(0.212608f) * AT(5, 4) + F(-0.180240f) * AT(7, 4));

+ const Temp_Type X105 = D(F(0.906127f) * AT(1, 5) + F(-0.318190f) * AT(3, 5) + F(0.212608f) * AT(5, 5) + F(-0.180240f) * AT(7, 5));

+ const Temp_Type X106 = D(F(0.906127f) * AT(1, 6) + F(-0.318190f) * AT(3, 6) + F(0.212608f) * AT(5, 6) + F(-0.180240f) * AT(7, 6));

+ const Temp_Type X107 = D(F(0.906127f) * AT(1, 7) + F(-0.318190f) * AT(3, 7) + F(0.212608f) * AT(5, 7) + F(-0.180240f) * AT(7, 7));

+ const Temp_Type X110 = AT(2, 0);

+ const Temp_Type X111 = AT(2, 1);

+ const Temp_Type X112 = AT(2, 2);

+ const Temp_Type X113 = AT(2, 3);

+ const Temp_Type X114 = AT(2, 4);

+ const Temp_Type X115 = AT(2, 5);

+ const Temp_Type X116 = AT(2, 6);

+ const Temp_Type X117 = AT(2, 7);

+ const Temp_Type X120 = D(F(-0.074658f) * AT(1, 0) + F(0.513280f) * AT(3, 0) + F(0.768178f) * AT(5, 0) + F(-0.375330f) * AT(7, 0));

+ const Temp_Type X121 = D(F(-0.074658f) * AT(1, 1) + F(0.513280f) * AT(3, 1) + F(0.768178f) * AT(5, 1) + F(-0.375330f) * AT(7, 1));

+ const Temp_Type X122 = D(F(-0.074658f) * AT(1, 2) + F(0.513280f) * AT(3, 2) + F(0.768178f) * AT(5, 2) + F(-0.375330f) * AT(7, 2));

+ const Temp_Type X123 = D(F(-0.074658f) * AT(1, 3) + F(0.513280f) * AT(3, 3) + F(0.768178f) * AT(5, 3) + F(-0.375330f) * AT(7, 3));

+ const Temp_Type X124 = D(F(-0.074658f) * AT(1, 4) + F(0.513280f) * AT(3, 4) + F(0.768178f) * AT(5, 4) + F(-0.375330f) * AT(7, 4));

+ const Temp_Type X125 = D(F(-0.074658f) * AT(1, 5) + F(0.513280f) * AT(3, 5) + F(0.768178f) * AT(5, 5) + F(-0.375330f) * AT(7, 5));

+ const Temp_Type X126 = D(F(-0.074658f) * AT(1, 6) + F(0.513280f) * AT(3, 6) + F(0.768178f) * AT(5, 6) + F(-0.375330f) * AT(7, 6));

+ const Temp_Type X127 = D(F(-0.074658f) * AT(1, 7) + F(0.513280f) * AT(3, 7) + F(0.768178f) * AT(5, 7) + F(-0.375330f) * AT(7, 7));

+ const Temp_Type X130 = AT(6, 0);

+ const Temp_Type X131 = AT(6, 1);

+ const Temp_Type X132 = AT(6, 2);

+ const Temp_Type X133 = AT(6, 3);

+ const Temp_Type X134 = AT(6, 4);

+ const Temp_Type X135 = AT(6, 5);

+ const Temp_Type X136 = AT(6, 6);

+ const Temp_Type X137 = AT(6, 7);

+ // 80 muls 48 adds

+

+ // 4x4 = 4x8 times 8x4, matrix 1 is constant

+ R.at(0, 0) = X100;

+ R.at(0, 1) = D(X101 * F(0.415735f) + X103 * F(0.791065f) + X105 * F(-0.352443f) + X107 * F(0.277785f));

+ R.at(0, 2) = X104;

+ R.at(0, 3) = D(X101 * F(0.022887f) + X103 * F(-0.097545f) + X105 * F(0.490393f) + X107 * F(0.865723f));

+ R.at(1, 0) = X110;

+ R.at(1, 1) = D(X111 * F(0.415735f) + X113 * F(0.791065f) + X115 * F(-0.352443f) + X117 * F(0.277785f));

+ R.at(1, 2) = X114;

+ R.at(1, 3) = D(X111 * F(0.022887f) + X113 * F(-0.097545f) + X115 * F(0.490393f) + X117 * F(0.865723f));

+ R.at(2, 0) = X120;

+ R.at(2, 1) = D(X121 * F(0.415735f) + X123 * F(0.791065f) + X125 * F(-0.352443f) + X127 * F(0.277785f));

+ R.at(2, 2) = X124;

+ R.at(2, 3) = D(X121 * F(0.022887f) + X123 * F(-0.097545f) + X125 * F(0.490393f) + X127 * F(0.865723f));

+ R.at(3, 0) = X130;

+ R.at(3, 1) = D(X131 * F(0.415735f) + X133 * F(0.791065f) + X135 * F(-0.352443f) + X137 * F(0.277785f));

+ R.at(3, 2) = X134;

+ R.at(3, 3) = D(X131 * F(0.022887f) + X133 * F(-0.097545f) + X135 * F(0.490393f) + X137 * F(0.865723f));

+ // 40 muls 24 adds

+ // 4x4 = 4x8 times 8x4, matrix 1 is constant

+ S.at(0, 0) = D(X101 * F(0.906127f) + X103 * F(-0.318190f) + X105 * F(0.212608f) + X107 * F(-0.180240f));

+ S.at(0, 1) = X102;

+ S.at(0, 2) = D(X101 * F(-0.074658f) + X103 * F(0.513280f) + X105 * F(0.768178f) + X107 * F(-0.375330f));

+ S.at(0, 3) = X106;

+ S.at(1, 0) = D(X111 * F(0.906127f) + X113 * F(-0.318190f) + X115 * F(0.212608f) + X117 * F(-0.180240f));

+ S.at(1, 1) = X112;

+ S.at(1, 2) = D(X111 * F(-0.074658f) + X113 * F(0.513280f) + X115 * F(0.768178f) + X117 * F(-0.375330f));

+ S.at(1, 3) = X116;

+ S.at(2, 0) = D(X121 * F(0.906127f) + X123 * F(-0.318190f) + X125 * F(0.212608f) + X127 * F(-0.180240f));

+ S.at(2, 1) = X122;

+ S.at(2, 2) = D(X121 * F(-0.074658f) + X123 * F(0.513280f) + X125 * F(0.768178f) + X127 * F(-0.375330f));

+ S.at(2, 3) = X126;

+ S.at(3, 0) = D(X131 * F(0.906127f) + X133 * F(-0.318190f) + X135 * F(0.212608f) + X137 * F(-0.180240f));

+ S.at(3, 1) = X132;

+ S.at(3, 2) = D(X131 * F(-0.074658f) + X133 * F(0.513280f) + X135 * F(0.768178f) + X137 * F(-0.375330f));

+ S.at(3, 3) = X136;

+ // 40 muls 24 adds

+ }

+ };

+ } // end namespace DCT_Upsample

+

+ // Unconditionally frees all allocated m_blocks.

+ void jpeg_decoder::free_all_blocks()

+ {

+ m_pStream = NULL;

+ for (mem_block *b = m_pMem_blocks; b; )

+ {

+ mem_block *n = b->m_pNext;

+ jpgd_free(b);

+ b = n;

+ }

+ m_pMem_blocks = NULL;

+ }

+

+ // This method handles all errors.

+ // It could easily be changed to use C++ exceptions.

+ void jpeg_decoder::stop_decoding(jpgd_status status)

+ {

+ m_error_code = status;

+ free_all_blocks();

+ longjmp(m_jmp_state, status);

+

+ // we shouldn't get here as longjmp shouldn't return, but we put it here to make it explicit

+ // that this function doesn't return, otherwise we get this error:

+ //

+ // error : function declared 'noreturn' should not return

+ exit(1);

+ }

+

+ void *jpeg_decoder::alloc(size_t nSize, bool zero)

+ {

+ nSize = (JPGD_MAX(nSize, 1) + 3) & ~3;

+ char *rv = NULL;

+ for (mem_block *b = m_pMem_blocks; b; b = b->m_pNext)

+ {

+ if ((b->m_used_count + nSize) <= b->m_size)

+ {

+ rv = b->m_data + b->m_used_count;

+ b->m_used_count += nSize;

+ break;

+ }

+ }

+ if (!rv)

+ {

+ int capacity = JPGD_MAX(32768 - 256, (nSize + 2047) & ~2047);

+ mem_block *b = (mem_block*)jpgd_malloc(sizeof(mem_block) + capacity);

+ if (!b) stop_decoding(JPGD_NOTENOUGHMEM);

+ b->m_pNext = m_pMem_blocks; m_pMem_blocks = b;

+ b->m_used_count = nSize;

+ b->m_size = capacity;

+ rv = b->m_data;

+ }

+ if (zero) memset(rv, 0, nSize);

+ return rv;

+ }

+

+ void jpeg_decoder::word_clear(void *p, uint16 c, uint n)

+ {

+ uint8 *pD = (uint8*)p;

+ const uint8 l = c & 0xFF, h = (c >> 8) & 0xFF;

+ while (n)

+ {

+ pD[0] = l; pD[1] = h; pD += 2;

+ n--;

+ }

+ }

+

+ // Refill the input buffer.

+ // This method will sit in a loop until (A) the buffer is full or (B)

+ // the stream's read() method reports an end of file condition.

+ void jpeg_decoder::prep_in_buffer()

+ {

+ m_in_buf_left = 0;

+ m_pIn_buf_ofs = m_in_buf;

+

+ if (m_eof_flag)

+ return;

+

+ do

+ {

+ int bytes_read = m_pStream->read(m_in_buf + m_in_buf_left, JPGD_IN_BUF_SIZE - m_in_buf_left, &m_eof_flag);

+ if (bytes_read == -1)

+ stop_decoding(JPGD_STREAM_READ);

+

+ m_in_buf_left += bytes_read;

+ } while ((m_in_buf_left < JPGD_IN_BUF_SIZE) && (!m_eof_flag));

+

+ m_total_bytes_read += m_in_buf_left;

+

+ // Pad the end of the block with M_EOI (prevents the decompressor from going off the rails if the stream is invalid).

+ // (This dates way back to when this decompressor was written in C/asm, and the all-asm Huffman decoder did some fancy things to increase perf.)

+ word_clear(m_pIn_buf_ofs + m_in_buf_left, 0xD9FF, 64);

+ }

+

+ // Read a Huffman code table.

+ void jpeg_decoder::read_dht_marker()

+ {

+ int i, index, count;

+ uint8 huff_num[17];

+ uint8 huff_val[256];

+

+ uint num_left = get_bits(16);

+

+ if (num_left < 2)

+ stop_decoding(JPGD_BAD_DHT_MARKER);

+

+ num_left -= 2;

+

+ while (num_left)

+ {

+ index = get_bits(8);

+

+ huff_num[0] = 0;

+

+ count = 0;

+

+ for (i = 1; i <= 16; i++)

+ {

+ huff_num[i] = static_cast<uint8>(get_bits(8));

+ count += huff_num[i];

+ }

+

+ if (count > 255)

+ stop_decoding(JPGD_BAD_DHT_COUNTS);

+

+ for (i = 0; i < count; i++)

+ huff_val[i] = static_cast<uint8>(get_bits(8));

+

+ i = 1 + 16 + count;

+

+ if (num_left < (uint)i)

+ stop_decoding(JPGD_BAD_DHT_MARKER);

+

+ num_left -= i;

+

+ if ((index & 0x10) > 0x10)

+ stop_decoding(JPGD_BAD_DHT_INDEX);

+

+ index = (index & 0x0F) + ((index & 0x10) >> 4) * (JPGD_MAX_HUFF_TABLES >> 1);

+

+ if (index >= JPGD_MAX_HUFF_TABLES)

+ stop_decoding(JPGD_BAD_DHT_INDEX);

+

+ if (!m_huff_num[index])

+ m_huff_num[index] = (uint8 *)alloc(17);

+

+ if (!m_huff_val[index])

+ m_huff_val[index] = (uint8 *)alloc(256);

+

+ m_huff_ac[index] = (index & 0x10) != 0;

+ memcpy(m_huff_num[index], huff_num, 17);

+ memcpy(m_huff_val[index], huff_val, 256);

+ }

+ }

+

+ // Read a quantization table.

+ void jpeg_decoder::read_dqt_marker()

+ {

+ int n, i, prec;

+ uint num_left;

+ uint temp;

+

+ num_left = get_bits(16);

+

+ if (num_left < 2)

+ stop_decoding(JPGD_BAD_DQT_MARKER);

+

+ num_left -= 2;

+

+ while (num_left)

+ {

+ n = get_bits(8);

+ prec = n >> 4;

+ n &= 0x0F;

+

+ if (n >= JPGD_MAX_QUANT_TABLES)

+ stop_decoding(JPGD_BAD_DQT_TABLE);

+

+ if (!m_quant[n])

+ m_quant[n] = (jpgd_quant_t *)alloc(64 * sizeof(jpgd_quant_t));

+

+ // read quantization entries, in zag order

+ for (i = 0; i < 64; i++)

+ {

+ temp = get_bits(8);

+

+ if (prec)

+ temp = (temp << 8) + get_bits(8);

+

+ m_quant[n][i] = static_cast<jpgd_quant_t>(temp);

+ }

+

+ i = 64 + 1;

+

+ if (prec)

+ i += 64;

+

+ if (num_left < (uint)i)

+ stop_decoding(JPGD_BAD_DQT_LENGTH);

+

+ num_left -= i;

+ }

+ }

+

+ // Read the start of frame (SOF) marker.

+ void jpeg_decoder::read_sof_marker()

+ {

+ int i;

+ uint num_left;

+

+ num_left = get_bits(16);

+

+ if (get_bits(8) != 8) /* precision: sorry, only 8-bit precision is supported right now */

+ stop_decoding(JPGD_BAD_PRECISION);

+

+ m_image_y_size = get_bits(16);

+

+ if ((m_image_y_size < 1) || (m_image_y_size > JPGD_MAX_HEIGHT))

+ stop_decoding(JPGD_BAD_HEIGHT);

+

+ m_image_x_size = get_bits(16);

+

+ if ((m_image_x_size < 1) || (m_image_x_size > JPGD_MAX_WIDTH))

+ stop_decoding(JPGD_BAD_WIDTH);

+

+ m_comps_in_frame = get_bits(8);

+

+ if (m_comps_in_frame > JPGD_MAX_COMPONENTS)

+ stop_decoding(JPGD_TOO_MANY_COMPONENTS);

+

+ if (num_left != (uint)(m_comps_in_frame * 3 + 8))

+ stop_decoding(JPGD_BAD_SOF_LENGTH);

+

+ for (i = 0; i < m_comps_in_frame; i++)

+ {

+ m_comp_ident[i] = get_bits(8);

+ m_comp_h_samp[i] = get_bits(4);

+ m_comp_v_samp[i] = get_bits(4);

+ m_comp_quant[i] = get_bits(8);

+ }

+ }

+

+ // Used to skip unrecognized markers.

+ void jpeg_decoder::skip_variable_marker()

+ {

+ uint num_left;

+

+ num_left = get_bits(16);

+

+ if (num_left < 2)

+ stop_decoding(JPGD_BAD_VARIABLE_MARKER);

+

+ num_left -= 2;

+

+ while (num_left)

+ {

+ get_bits(8);

+ num_left--;

+ }

+ }

+

+ // Read a define restart interval (DRI) marker.

+ void jpeg_decoder::read_dri_marker()

+ {

+ if (get_bits(16) != 4)

+ stop_decoding(JPGD_BAD_DRI_LENGTH);

+

+ m_restart_interval = get_bits(16);

+ }

+

+ // Read a start of scan (SOS) marker.

+ void jpeg_decoder::read_sos_marker()

+ {

+ uint num_left;

+ int i, ci, n, c, cc;

+

+ num_left = get_bits(16);

+

+ n = get_bits(8);

+

+ m_comps_in_scan = n;

+

+ num_left -= 3;

+

+ if ( (num_left != (uint)(n * 2 + 3)) || (n < 1) || (n > JPGD_MAX_COMPS_IN_SCAN) )

+ stop_decoding(JPGD_BAD_SOS_LENGTH);

+

+ for (i = 0; i < n; i++)

+ {

+ cc = get_bits(8);

+ c = get_bits(8);

+ num_left -= 2;

+

+ for (ci = 0; ci < m_comps_in_frame; ci++)

+ if (cc == m_comp_ident[ci])

+ break;

+

+ if (ci >= m_comps_in_frame)

+ stop_decoding(JPGD_BAD_SOS_COMP_ID);

+

+ m_comp_list[i] = ci;

+ m_comp_dc_tab[ci] = (c >> 4) & 15;

+ m_comp_ac_tab[ci] = (c & 15) + (JPGD_MAX_HUFF_TABLES >> 1);

+ }

+

+ m_spectral_start = get_bits(8);

+ m_spectral_end = get_bits(8);

+ m_successive_high = get_bits(4);

+ m_successive_low = get_bits(4);

+

+ if (!m_progressive_flag)

+ {

+ m_spectral_start = 0;

+ m_spectral_end = 63;

+ }

+

+ num_left -= 3;

+

+ while (num_left) /* read past whatever is num_left */

+ {

+ get_bits(8);

+ num_left--;

+ }

+ }

+

+ // Finds the next marker.

+ int jpeg_decoder::next_marker()

+ {

+ uint c, bytes;

+

+ bytes = 0;

+

+ do

+ {

+ do

+ {

+ bytes++;

+ c = get_bits(8);

+ } while (c != 0xFF);

+

+ do

+ {

+ c = get_bits(8);

+ } while (c == 0xFF);

+

+ } while (c == 0);

+

+ // If bytes > 0 here, there were extra bytes before the marker (not good).

+

+ return c;

+ }

+

+ // Process markers. Returns when an SOFx, SOI, EOI, or SOS marker is

+ // encountered.

+ int jpeg_decoder::process_markers()

+ {

+ int c;

+

+ for ( ; ; )

+ {

+ c = next_marker();

+

+ switch (c)

+ {

+ case M_SOF0:

+ case M_SOF1:

+ case M_SOF2:

+ case M_SOF3:

+ case M_SOF5:

+ case M_SOF6:

+ case M_SOF7:

+ // case M_JPG:

+ case M_SOF9:

+ case M_SOF10:

+ case M_SOF11:

+ case M_SOF13:

+ case M_SOF14:

+ case M_SOF15:

+ case M_SOI:

+ case M_EOI:

+ case M_SOS:

+ {

+ return c;

+ }

+ case M_DHT:

+ {

+ read_dht_marker();

+ break;

+ }

+ // No arithmetic coding support - dumb patents!

+ case M_DAC:

+ {

+ stop_decoding(JPGD_NO_ARITHMITIC_SUPPORT);

+ break;

+ }

+ case M_DQT:

+ {

+ read_dqt_marker();

+ break;

+ }

+ case M_DRI:

+ {

+ read_dri_marker();

+ break;

+ }

+ //case M_APP0: /* no need to read the JFIF marker */

+

+ case M_JPG:

+ case M_RST0: /* no parameters */

+ case M_RST1:

+ case M_RST2:

+ case M_RST3:

+ case M_RST4:

+ case M_RST5:

+ case M_RST6:

+ case M_RST7:

+ case M_TEM:

+ {

+ stop_decoding(JPGD_UNEXPECTED_MARKER);

+ break;

+ }

+ default: /* must be DNL, DHP, EXP, APPn, JPGn, COM, or RESn or APP0 */

+ {

+ skip_variable_marker();

+ break;

+ }

+ }

+ }

+ }

+

+ // Finds the start of image (SOI) marker.

+ // This code is rather defensive: it only checks the first 4096 bytes to avoid

+ // false positives.

+ void jpeg_decoder::locate_soi_marker()

+ {

+ uint lastchar, thischar;

+ uint bytesleft;

+

+ lastchar = get_bits(8);

+

+ thischar = get_bits(8);

+

+ /* ok if it's a normal JPEG file without a special header */

+

+ if ((lastchar == 0xFF) && (thischar == M_SOI))

+ return;

+

+ bytesleft = 4096; //512;

+

+ for ( ; ; )

+ {

+ if (--bytesleft == 0)

+ stop_decoding(JPGD_NOT_JPEG);

+

+ lastchar = thischar;

+

+ thischar = get_bits(8);

+

+ if (lastchar == 0xFF)

+ {

+ if (thischar == M_SOI)

+ break;

+ else if (thischar == M_EOI) // get_bits will keep returning M_EOI if we read past the end

+ stop_decoding(JPGD_NOT_JPEG);

+ }

+ }

+

+ // Check the next character after marker: if it's not 0xFF, it can't be the start of the next marker, so the file is bad.

+ thischar = (m_bit_buf >> 24) & 0xFF;

+

+ if (thischar != 0xFF)

+ stop_decoding(JPGD_NOT_JPEG);

+ }

+

+ // Find a start of frame (SOF) marker.

+ void jpeg_decoder::locate_sof_marker()

+ {

+ locate_soi_marker();

+

+ int c = process_markers();

+

+ switch (c)

+ {

+ case M_SOF2:

+ m_progressive_flag = JPGD_TRUE; // intentional fall-through: progressive frames share the SOF parsing below

+ case M_SOF0: /* baseline DCT */

+ case M_SOF1: /* extended sequential DCT */

+ {

+ read_sof_marker();

+ break;

+ }

+ case M_SOF9: /* Arithmetic coding */

+ {

+ stop_decoding(JPGD_NO_ARITHMITIC_SUPPORT);

+ break;

+ }

+ default:

+ {

+ stop_decoding(JPGD_UNSUPPORTED_MARKER);

+ break;

+ }

+ }

+ }

+

+ // Find a start of scan (SOS) marker.

+ int jpeg_decoder::locate_sos_marker()

+ {

+ int c;

+

+ c = process_markers();

+

+ if (c == M_EOI)

+ return JPGD_FALSE;

+ else if (c != M_SOS)

+ stop_decoding(JPGD_UNEXPECTED_MARKER);

+

+ read_sos_marker();

+

+ return JPGD_TRUE;

+ }

+

+ // Reset everything to default/uninitialized state.

+ void jpeg_decoder::init(jpeg_decoder_stream *pStream)

+ {

+ m_pMem_blocks = NULL;

+ m_error_code = JPGD_SUCCESS;

+ m_ready_flag = false;

+ m_image_x_size = m_image_y_size = 0;

+ m_pStream = pStream;

+ m_progressive_flag = JPGD_FALSE;

+

+ memset(m_huff_ac, 0, sizeof(m_huff_ac));

+ memset(m_huff_num, 0, sizeof(m_huff_num));

+ memset(m_huff_val, 0, sizeof(m_huff_val));

+ memset(m_quant, 0, sizeof(m_quant));

+

+ m_scan_type = 0;

+ m_comps_in_frame = 0;

+

+ memset(m_comp_h_samp, 0, sizeof(m_comp_h_samp));

+ memset(m_comp_v_samp, 0, sizeof(m_comp_v_samp));

+ memset(m_comp_quant, 0, sizeof(m_comp_quant));

+ memset(m_comp_ident, 0, sizeof(m_comp_ident));

+ memset(m_comp_h_blocks, 0, sizeof(m_comp_h_blocks));

+ memset(m_comp_v_blocks, 0, sizeof(m_comp_v_blocks));

+

+ m_comps_in_scan = 0;

+ memset(m_comp_list, 0, sizeof(m_comp_list));

+ memset(m_comp_dc_tab, 0, sizeof(m_comp_dc_tab));

+ memset(m_comp_ac_tab, 0, sizeof(m_comp_ac_tab));

+

+ m_spectral_start = 0;

+ m_spectral_end = 0;

+ m_successive_low = 0;

+ m_successive_high = 0;

+ m_max_mcu_x_size = 0;

+ m_max_mcu_y_size = 0;

+ m_blocks_per_mcu = 0;

+ m_max_blocks_per_row = 0;

+ m_mcus_per_row = 0;

+ m_mcus_per_col = 0;

+ m_expanded_blocks_per_component = 0;

+ m_expanded_blocks_per_mcu = 0;

+ m_expanded_blocks_per_row = 0;

+ m_freq_domain_chroma_upsample = false;

+

+ memset(m_mcu_org, 0, sizeof(m_mcu_org));

+

+ m_total_lines_left = 0;

+ m_mcu_lines_left = 0;

+ m_real_dest_bytes_per_scan_line = 0;

+ m_dest_bytes_per_scan_line = 0;

+ m_dest_bytes_per_pixel = 0;

+

+ memset(m_pHuff_tabs, 0, sizeof(m_pHuff_tabs));

+

+ memset(m_dc_coeffs, 0, sizeof(m_dc_coeffs));

+ memset(m_ac_coeffs, 0, sizeof(m_ac_coeffs));

+ memset(m_block_y_mcu, 0, sizeof(m_block_y_mcu));

+

+ m_eob_run = 0;

+

+ memset(m_block_y_mcu, 0, sizeof(m_block_y_mcu));

+

+ m_pIn_buf_ofs = m_in_buf;

+ m_in_buf_left = 0;

+ m_eof_flag = false;

+ m_tem_flag = 0;

+

+ memset(m_in_buf_pad_start, 0, sizeof(m_in_buf_pad_start));

+ memset(m_in_buf, 0, sizeof(m_in_buf));

+ memset(m_in_buf_pad_end, 0, sizeof(m_in_buf_pad_end));

+

+ m_restart_interval = 0;

+ m_restarts_left = 0;

+ m_next_restart_num = 0;

+

+ m_max_mcus_per_row = 0;

+ m_max_blocks_per_mcu = 0;

+ m_max_mcus_per_col = 0;

+

+ memset(m_last_dc_val, 0, sizeof(m_last_dc_val));

+ m_pMCU_coefficients = NULL;

+ m_pSample_buf = NULL;

+

+ m_total_bytes_read = 0;

+

+ m_pScan_line_0 = NULL;

+ m_pScan_line_1 = NULL;

+

+ // Ready the input buffer.

+ prep_in_buffer();

+

+ // Prime the bit buffer.

+ m_bits_left = 16;

+ m_bit_buf = 0;

+

+ get_bits(16);

+ get_bits(16);

+

+ for (int i = 0; i < JPGD_MAX_BLOCKS_PER_MCU; i++)

+ m_mcu_block_max_zag[i] = 64;

+ }

+

+#define SCALEBITS 16

+#define ONE_HALF ((int) 1 << (SCALEBITS-1))

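+#define FIX(x) ((int) ((x) * (1L<<SCALEBITS) + 0.5f))

+

+ // Create a few tables that allow us to quickly convert YCbCr to RGB.

+ void jpeg_decoder::create_look_ups()

+ {

+ for (int i = 0; i <= 255; i++)

+ {

+ int k = i - 128;

+ m_crr[i] = ( FIX(1.40200f) * k + ONE_HALF) >> SCALEBITS;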

+ m_cbb[i] = ( FIX(1.77200f) * k + ONE_HALF) >> SCALEBITS;

+ m_crg[i] = (-FIX(0.71414f)) * k;

+ m_cbg[i] = (-FIX(0.34414f)) * k + ONE_HALF;

+ }

+ }

+

+ // This method throws back into the stream any bytes that were read

+ // into the bit buffer during initial marker scanning.

+ void jpeg_decoder::fix_in_buffer()

+ {

+ // In case any 0xFF's were pulled into the buffer during marker scanning.

+ JPGD_ASSERT((m_bits_left & 7) == 0);

+

+ if (m_bits_left == 16)

+ stuff_char( (uint8)(m_bit_buf & 0xFF));

+

+ if (m_bits_left >= 8)

+ stuff_char( (uint8)((m_bit_buf >> 8) & 0xFF));

+

+ stuff_char((uint8)((m_bit_buf >> 16) & 0xFF));

+ stuff_char((uint8)((m_bit_buf >> 24) & 0xFF));

+

+ m_bits_left = 16;

+ get_bits_no_markers(16);

+ get_bits_no_markers(16);

+ }

+

+ void jpeg_decoder::transform_mcu(int mcu_row)

+ {

+ jpgd_block_t* pSrc_ptr = m_pMCU_coefficients;

+ uint8* pDst_ptr = m_pSample_buf + mcu_row * m_blocks_per_mcu * 64;

+

+ for (int mcu_block = 0; mcu_block < m_blocks_per_mcu; mcu_block++)

+ {