Compare commits

18 Commits

| Author | SHA1 | Date | |
|---|---|---|---|
| | 3103817990 | | |
| | 88aaf310c4 | | |
| | e8d86a3242 | | |
| | 37fd1b1c97 | | |
| | e3e313beab | | |
| | 380a8a9d4d | | |
| | d00f6bb1a6 | | |
| | f8a44a82a9 | | |
| | 2be45c386d | | |
| | 2b7e8b9278 | | |
| | 8590014462 | | |
| | 8215caddb2 | | |
| | 93a9d27b1e | | |
| | e128523103 | | |
| | da0caab4cf | | |
| | 6e9813565a | | |
| | 01cc409f99 | | |
| | 3438f8f291 | | |

56

README.md

@@ -2,7 +2,42 @@

**If you like this project, please give it a Star**

## Using Docker
- Supports markdown tables in GPT output
<div align="center">
<img src="demo2.jpg" width="500" >
</div>

- If the output contains formulas, they are shown both as TeX source and in rendered form, for easy copying and reading
<div align="center">
<img src="demo.jpg" width="500" >
</div>

- All buttons are generated dynamically by reading functional.py, so you can freely add custom functions and free up your clipboard
<div align="center">
<img src="公式.gif" width="700" >
</div>

- Code display works as well, of course: https://www.bilibili.com/video/BV1F24y147PD/
<div align="center">
<img src="润色.gif" width="700" >
</div>

## Run directly (Windows or Linux)

```
# Download the project
git clone https://github.com/binary-husky/chatgpt_academic.git
cd chatgpt_academic
# Configure the overseas proxy and the OpenAI API key
config.py
# Install dependencies
python -m pip install -r requirements.txt
# Run
python main.py
```


## Using Docker (Linux)

``` sh
# Download the project

@@ -17,6 +52,25 @@ docker run --rm -it --net=host gpt-academic

```


## Adding custom shortcut buttons
Open functional.py; one look is enough to see how it works.
For example
```
"英译中": {
    "Prefix": "请翻译成中文:\n\n",
    "Button": None,
    "Suffix": "",
},
```


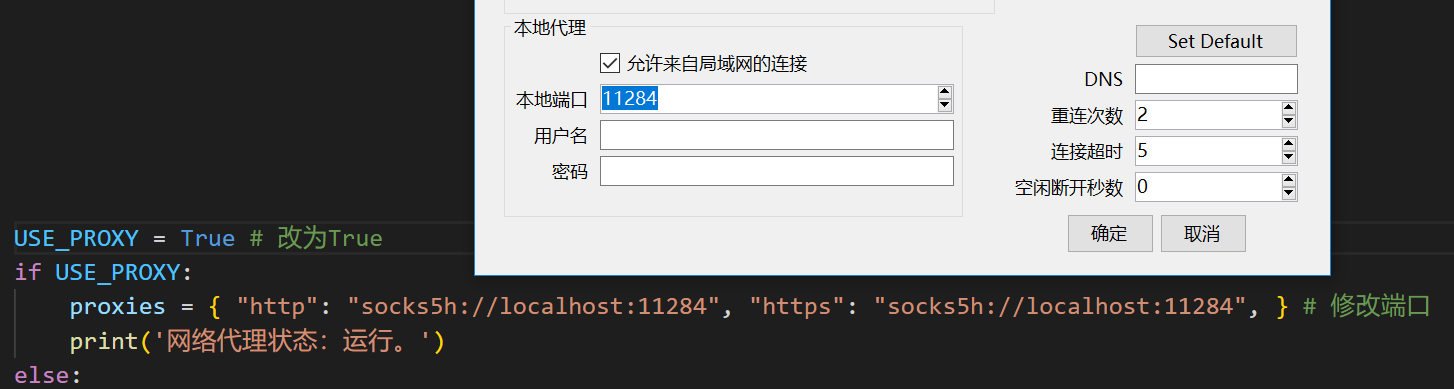

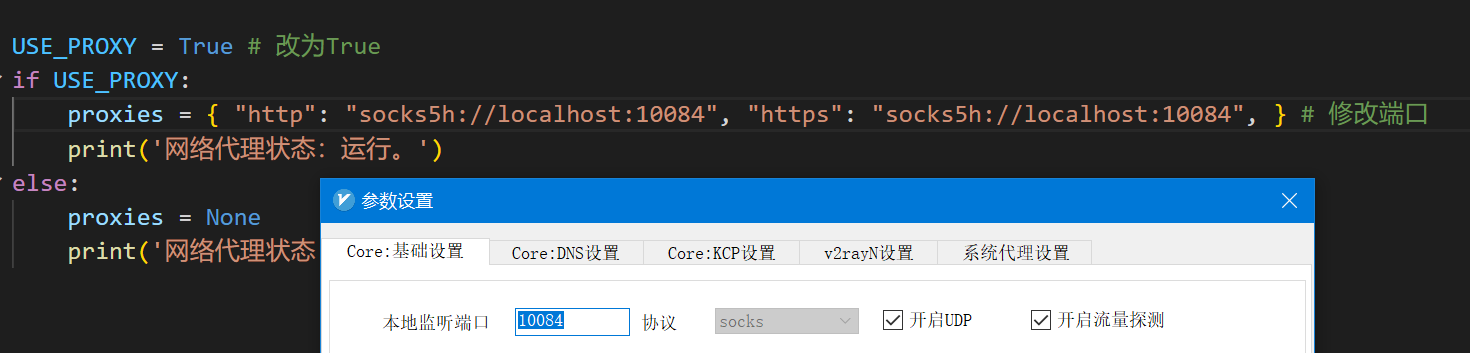
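As a minimal sketch of how such an entry might be used: the prefix and suffix are wrapped around the user's input before it is sent to the model. The helper `apply_functional` and the `"Summarize"` entry are hypothetical names introduced here for illustration; they are not taken from the repository.

```python
# Hypothetical illustration; not the repository's actual code.
functionals = {
    # "英译中" means "English to Chinese"; its prefix asks for a Chinese translation.
    "英译中": {"Prefix": "请翻译成中文:\n\n", "Button": None, "Suffix": ""},
    # An assumed custom entry, added in the same shape.
    "Summarize": {
        "Prefix": "Summarize the following text:\n\n",
        "Button": None,
        "Suffix": "\n\nKeep it under 50 words.",
    },
}


def apply_functional(name: str, user_input: str) -> str:
    """Wrap user_input with the prefix and suffix of the named entry."""
    entry = functionals[name]
    return entry["Prefix"] + user_input + entry["Suffix"]


prompt = apply_functional("Summarize", "Large language models are ...")
print(prompt)
```

Keeping each button as plain data means a new button needs only a new dictionary entry and no new code path.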

## Configuring the proxy

In ```config.py```, set the port to match your proxy software.


## Reference projects
```
https://github.com/Python-Markdown/markdown


26

check_proxy.py

Normal file

@@ -0,0 +1,26 @@

"""
Me: use Python's requests library to look up the location of this machine's IP address
ChatGPT:
"""
def check_proxy(proxies):
    import requests
    proxies_https = proxies['https'] if proxies is not None else '无'
    try:
        response = requests.get("https://ipapi.co/json/", proxies=proxies, timeout=4)
        data = response.json()
        print(f'查询代理的地理位置,返回的结果是{data}')
        country = data['country_name']
        result = f"代理配置 {proxies_https}, 代理所在地:{country}"
        print(result)
        return result
    except:
        result = f"代理配置 {proxies_https}, 代理所在地查询超时,代理可能无效"
        print(result)
        return result


if __name__ == '__main__':
    try: from config_private import proxies # keep your own secrets here, such as the API key and proxy address; os.path.exists('config_private.py')
    except: from config import proxies
    check_proxy(proxies)

14

config.py

@@ -1,11 +1,23 @@
# my_api_key = "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"
# API_KEY = "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" (this key is invalid)
API_KEY = "sk-此处填API秘钥"
API_URL = "https://api.openai.com/v1/chat/completions"

# Set to True to use the proxy
USE_PROXY = False
if USE_PROXY:
    # Proxy address: open your proxy software and check its protocol (socks5/http), address (localhost), and port (11284)
    proxies = { "http": "socks5h://localhost:11284", "https": "socks5h://localhost:11284", }
    print('网络代理状态:运行。')
else:
    proxies = None
    print('网络代理状态:未配置。无代理状态下很可能无法访问。')

# How long to wait after sending a request to OpenAI before treating it as timed out
TIMEOUT_SECONDS = 20

# Port for the web page; -1 means a random port
WEB_PORT = -1

# Check whether the user forgot to edit the config
if API_KEY == "sk-此处填API秘钥":
    assert False, "请在config文件中修改API密钥, 添加海外代理之后再运行"
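The other files in this comparison read these settings with a `try: from config_private import ... / except: from config import ...` pair, so a private, untracked `config_private.py` can override `config.py`. A minimal sketch of the same idea with a narrower fallback follows. The helper name `load_settings` is an assumption made for illustration; it is not part of the repository.

```python
import importlib
from types import ModuleType


def load_settings(primary: str = "config_private", fallback: str = "config") -> ModuleType:
    """Return the private settings module if it exists, else the default one."""
    try:
        return importlib.import_module(primary)
    except ModuleNotFoundError as exc:
        if exc.name != primary:
            # The private module exists, but one of its own imports is missing.
            raise
        return importlib.import_module(fallback)


# Typical use (assumes a config.py like the one above is importable):
#   settings = load_settings()
#   proxies = settings.proxies
```

Unlike a bare `except:`, this variant falls back only when the private module itself is absent, so a syntax error or a broken import inside `config_private.py` surfaces instead of silently selecting the defaults.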

@@ -10,19 +10,16 @@ def get_functionals():
        "英语学术润色": {
            "Prefix": "Below is a paragraph from an academic paper. Polish the writing to meet the academic style, \
improve the spelling, grammar, clarity, concision and overall readability. When neccessary, rewrite the whole sentence. \
Furthermore, list all modification and explain the reasons to do so in markdown table.\n\n",
            "Button": None,
            "Suffix": "",
            "Color": "stop",
Furthermore, list all modification and explain the reasons to do so in markdown table.\n\n", # prefix (text placed before the input)
            "Suffix": "", # suffix (text placed after the input)
            "Color": "stop", # button color
        },
        "中文学术润色": {
            "Prefix": "作为一名中文学术论文写作改进助理,你的任务是改进所提供文本的拼写、语法、清晰、简洁和整体可读性,同时分解长句,减少重复,并提供改进建议。请只提供文本的更正版本,避免包括解释。请编辑以下文本:\n\n",
            "Button": None,
            "Suffix": "",
        },
        "查找语法错误": {
            "Prefix": "Below is a paragraph from an academic paper. Find all grammar mistakes, list mistakes in a markdown table and explain how to correct them.\n\n",
            "Button": None,
            "Suffix": "",
        },
        "中英互译": {

@@ -37,28 +34,23 @@ When providing translations, please use Chinese to explain each sentence’s ten
For phrases or individual words that require translation, provide the source (dictionary) for each one.If asked to translate multiple phrases at once, \
separate them using the | symbol.Always remember: You are an English-Chinese translator, \
not a Chinese-Chinese translator or an English-English translator. Below is the text you need to translate: \n\n",
            "Button": None,
            "Suffix": "",
            "Color": "stop",
        },
        "中译英": {
            "Prefix": "Please translate following sentence to English: \n\n",
            "Button": None,
            "Suffix": "",
        },
        "学术中译英": {
            "Prefix": "Please translate following sentence to English with academic writing, and provide some related authoritative examples: \n\n",
            "Button": None,
            "Suffix": "",
        },
        "英译中": {
            "Prefix": "请翻译成中文:\n\n",
            "Button": None,
            "Suffix": "",
        },
        "解释代码": {
            "Prefix": "请解释以下代码:\n```\n",
            "Button": None,
            "Suffix": "\n```\n",
            "Color": "stop",
        },


18

main.py

@@ -4,6 +4,9 @@ import markdown, mdtex2html
from predict import predict
from show_math import convert as convert_math

try: from config_private import proxies, WEB_PORT # keep your own secrets here, such as the API key and proxy address; os.path.exists('config_private.py')
except: from config import proxies, WEB_PORT

def find_free_port():
    import socket
    from contextlib import closing

@@ -11,16 +14,16 @@ def find_free_port():
        s.bind(('', 0))
        s.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
        return s.getsockname()[1]

PORT = find_free_port()

PORT = find_free_port() if WEB_PORT <= 0 else WEB_PORT

initial_prompt = "Serve me as a writing and programming assistant."
title_html = """<h1 align="center">ChatGPT 学术优化</h1>"""

import logging
os.makedirs('gpt_log', exist_ok=True)
logging.basicConfig(filename='gpt_log/predict.log', level=logging.INFO)

logging.basicConfig(filename='gpt_log/chat_secrets.log', level=logging.INFO, encoding='utf-8')
print('所有问询记录将自动保存在本地目录./gpt_log/chat_secrets.log,请注意自我隐私保护哦!')

from functional import get_functionals
functional = get_functionals()
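The port selection in this hunk can be sketched as a self-contained snippet. The `with closing(socket.socket(...))` line is not visible in the hunk and is assumed here; `choose_port` is a hypothetical wrapper name, and the `WEB_PORT` value simply mirrors the default shown in config.py.

```python
import socket
from contextlib import closing

WEB_PORT = -1  # assumed value, mirroring the default in config.py


def find_free_port() -> int:
    """Ask the operating system for an unused TCP port by binding to port 0."""
    # Assumed socket setup; only the three body lines appear in the hunk.
    with closing(socket.socket(socket.AF_INET, socket.SOCK_STREAM)) as s:
        s.bind(("", 0))
        s.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
        return s.getsockname()[1]


def choose_port(web_port: int) -> int:
    """Use the configured port when it is positive, otherwise pick a free one."""
    return find_free_port() if web_port <= 0 else web_port


PORT = choose_port(WEB_PORT)
print(f"serving on port {PORT}")
```

The socket is closed before the port is used, so another process could in principle claim the port in between. For a local tool that is usually an acceptable trade-off.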

@@ -46,9 +49,6 @@ def markdown_convertion(txt):
    else:
        return markdown.markdown(txt,extensions=['fenced_code','tables'])

    # math_config = {'mdx_math': {'enable_dollar_delimiter': True}}
    # markdown.markdown(txt, extensions=['fenced_code', 'tables', 'mdx_math'], extension_configs=math_config)


def format_io(self,y):
    if y is None:

@@ -84,8 +84,8 @@ with gr.Blocks() as demo:
            for k in functional:
                variant = functional[k]["Color"] if "Color" in functional[k] else "secondary"
                functional[k]["Button"] = gr.Button(k, variant=variant)

            statusDisplay = gr.Markdown("status: ready")
            from check_proxy import check_proxy
            statusDisplay = gr.Markdown(f"{check_proxy(proxies)}")
            systemPromptTxt = gr.Textbox(show_label=True, placeholder=f"System Prompt", label="System prompt", value=initial_prompt).style(container=True)
            #inputs, top_p, temperature, top_k, repetition_penalty
            with gr.Accordion("arguments", open=False):
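The button loop above falls back to the `"secondary"` variant when an entry has no `"Color"` key. A small sketch of that lookup in isolation, without Gradio, might look like this. The helper name `button_variant` and the shortened sample entries are assumptions for illustration.

```python
# Shortened sample entries; the real prompts live in functional.py.
functional = {
    "英语学术润色": {"Prefix": "...", "Suffix": "", "Color": "stop"},
    "英译中": {"Prefix": "...", "Suffix": ""},
}


def button_variant(entry: dict, default: str = "secondary") -> str:
    """Return the entry's button color, or the default when none is set."""
    return entry.get("Color", default)


variants = {name: button_variant(entry) for name, entry in functional.items()}
print(variants)
```

`dict.get` expresses the same default-on-missing behavior as the conditional expression in the hunk.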

92

predict.py

@@ -4,23 +4,11 @@ import logging
import traceback
import requests
import importlib
import os

if os.path.exists('config_private.py'):
    # keep your own secrets here, such as the API key and proxy address
    from config_private import proxies, API_URL, API_KEY
else:
    from config import proxies, API_URL, API_KEY



def compose_system(system_prompt):
    return {"role": "system", "content": system_prompt}


def compose_user(user_input):
    return {"role": "user", "content": user_input}
try: from config_private import proxies, API_URL, API_KEY, TIMEOUT_SECONDS # keep your own secrets here, such as the API key and proxy address; os.path.exists('config_private.py')
except: from config import proxies, API_URL, API_KEY, TIMEOUT_SECONDS

timeout_bot_msg = 'Request timeout, network error. please check proxy settings in config.py.'

def predict(inputs, top_p, temperature, chatbot=[], history=[], system_prompt='', retry=False,
            stream = True, additional_fn=None):

@@ -35,7 +23,7 @@ def predict(inputs, top_p, temperature, chatbot=[], history=[], system_prompt=''
    raw_input = inputs
    logging.info(f'[raw_input] {raw_input}')
    chatbot.append((inputs, ""))
    yield chatbot, history, "Waiting"
    yield chatbot, history, "等待响应"

    headers = {
        "Content-Type": "application/json",

@@ -46,29 +34,32 @@ def predict(inputs, top_p, temperature, chatbot=[], history=[], system_prompt=''

    print(f"chat_counter - {chat_counter}")

    messages = [compose_system(system_prompt)]
    messages = [{"role": "system", "content": system_prompt}]
    if chat_counter:
        for index in range(0, 2*chat_counter, 2):
            d1 = {}
            d1["role"] = "user"
            d1["content"] = history[index]
            d2 = {}
            d2["role"] = "assistant"
            d2["content"] = history[index+1]
            if d1["content"] != "":
                if d2["content"] != "" or retry:
                    messages.append(d1)
                    messages.append(d2)
            what_i_have_asked = {}
            what_i_have_asked["role"] = "user"
            what_i_have_asked["content"] = history[index]
            what_gpt_answer = {}
            what_gpt_answer["role"] = "assistant"
            what_gpt_answer["content"] = history[index+1]
            if what_i_have_asked["content"] != "":
                if not (what_gpt_answer["content"] != "" or retry): continue
                if what_gpt_answer["content"] == timeout_bot_msg: continue
                messages.append(what_i_have_asked)
                messages.append(what_gpt_answer)
            else:
                messages[-1]['content'] = d2['content']
                messages[-1]['content'] = what_gpt_answer['content']

    if retry and chat_counter:
        messages.pop()
    else:
        temp3 = {}
        temp3["role"] = "user"
        temp3["content"] = inputs
        messages.append(temp3)
        what_i_ask_now = {}
        what_i_ask_now["role"] = "user"
        what_i_ask_now["content"] = inputs
        messages.append(what_i_ask_now)
        chat_counter += 1

    # messages
    payload = {
        "model": "gpt-3.5-turbo",
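The history handling in this hunk can be illustrated with a simplified, standalone sketch. It keeps only the core idea: `history` alternates user and assistant turns, and pairs whose answer is empty or equals the timeout message are skipped. The function name `build_messages` is hypothetical, and the retry handling and the `else` branch of the original loop are deliberately left out.

```python
from typing import Dict, List

# Same text as timeout_bot_msg in predict.py.
TIMEOUT_MSG = "Request timeout, network error. please check proxy settings in config.py."


def build_messages(system_prompt: str, history: List[str], inputs: str) -> List[Dict[str, str]]:
    """Turn a flat [user, assistant, user, assistant, ...] history into chat messages."""
    messages = [{"role": "system", "content": system_prompt}]
    for index in range(0, len(history) - 1, 2):
        asked, answered = history[index], history[index + 1]
        # Skip incomplete turns and turns that ended in a timeout.
        if asked == "" or answered == "" or answered == TIMEOUT_MSG:
            continue
        messages.append({"role": "user", "content": asked})
        messages.append({"role": "assistant", "content": answered})
    messages.append({"role": "user", "content": inputs})
    return messages


demo = build_messages("Be concise.", ["hi", "hello", "second question", TIMEOUT_MSG], "third question")
for message in demo:
    print(message["role"], "->", message["content"])
```

Dropping timed-out turns keeps the error text from being sent back to the model as if it were a real answer, which appears to be the purpose of the `timeout_bot_msg` check added in this hunk.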

@@ -87,10 +78,10 @@ def predict(inputs, top_p, temperature, chatbot=[], history=[], system_prompt=''
    try:
        # make a POST request to the API endpoint using the requests.post method, passing in stream=True
        response = requests.post(API_URL, headers=headers, proxies=proxies,
                                 json=payload, stream=True, timeout=15)
                                 json=payload, stream=True, timeout=TIMEOUT_SECONDS)
    except:
        chatbot[-1] = ((chatbot[-1][0], 'Requests Timeout, Network Error.'))
        yield chatbot, history, "Requests Timeout"
        chatbot[-1] = ((chatbot[-1][0], timeout_bot_msg))
        yield chatbot, history, "请求超时"
        raise TimeoutError

    token_counter = 0

@@ -101,8 +92,6 @@ def predict(inputs, top_p, temperature, chatbot=[], history=[], system_prompt=''
        stream_response = response.iter_lines()
        while True:
            chunk = next(stream_response)
            # print(chunk)

            if chunk == b'data: [DONE]':
                break


@@ -119,16 +108,21 @@ def predict(inputs, top_p, temperature, chatbot=[], history=[], system_prompt=''
                        break
                except Exception as e:
                    traceback.print_exc()
                    print(chunk.decode())

                chunkjson = json.loads(chunk.decode()[6:])
                status_text = f"id: {chunkjson['id']}, finish_reason: {chunkjson['choices'][0]['finish_reason']}"
                partial_words = partial_words + \
                    json.loads(chunk.decode()[6:])[
                        'choices'][0]["delta"]["content"]
                if token_counter == 0:
                    history.append(" " + partial_words)
                else:
                    history[-1] = partial_words
                chatbot[-1] = (history[-2], history[-1])
                token_counter += 1
                yield chatbot, history, status_text
                try:
                    chunkjson = json.loads(chunk.decode()[6:])
                    status_text = f"finish_reason: {chunkjson['choices'][0]['finish_reason']}"
                    partial_words = partial_words + json.loads(chunk.decode()[6:])['choices'][0]["delta"]["content"]
                    if token_counter == 0:
                        history.append(" " + partial_words)
                    else:
                        history[-1] = partial_words
                    chatbot[-1] = (history[-2], history[-1])
                    token_counter += 1
                    yield chatbot, history, status_text

                except Exception as e:
                    traceback.print_exc()
                    print(chunk.decode())
                    yield chatbot, history, "Json解析不合常规"
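The streaming loop strips the first six characters (`data: `) from each line before decoding it as JSON. A minimal, offline sketch of that parsing step follows, under the assumption that each streamed line has the `data: {...}` shape used above. The function name `parse_stream_chunk` and the sample lines are hypothetical.

```python
import json
from typing import Optional


def parse_stream_chunk(chunk: bytes) -> Optional[str]:
    """Return the text delta carried by one streamed line, or None if it has none."""
    line = chunk.decode("utf-8").strip()
    if not line.startswith("data:"):
        return None  # blank keep-alive lines and anything that is not a data field
    body = line[len("data:"):].strip()
    if body == "[DONE]":
        return None  # end-of-stream marker
    try:
        delta = json.loads(body)["choices"][0]["delta"]
        return delta.get("content")
    except (json.JSONDecodeError, KeyError, IndexError, TypeError, AttributeError):
        return None  # malformed or unexpected payload


# Hypothetical sample lines in the shape the loop above expects.
sample_lines = [
    b'data: {"choices": [{"delta": {"role": "assistant"}, "finish_reason": null}]}',
    b'data: {"choices": [{"delta": {"content": "Hel"}, "finish_reason": null}]}',
    b"",
    b'data: {"choices": [{"delta": {"content": "lo"}, "finish_reason": null}]}',
    b"data: [DONE]",
]

partial_words = ""
for raw in sample_lines:
    piece = parse_stream_chunk(raw)
    if piece is not None:
        partial_words += piece
print(partial_words)
```

Returning `None` for anything unparseable keeps the accumulation loop simple; the hunk above instead reports such lines through the status text.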
